7. Transfer learning of a Faster R-CNN object recognition model using TorchVision

The notebook (exercise) for this section (open in another tab).

Video (27 min)

Explanation of the General Object Recognition Model PDF

An implementation using YOLOv8 is also available: by YOLOv8

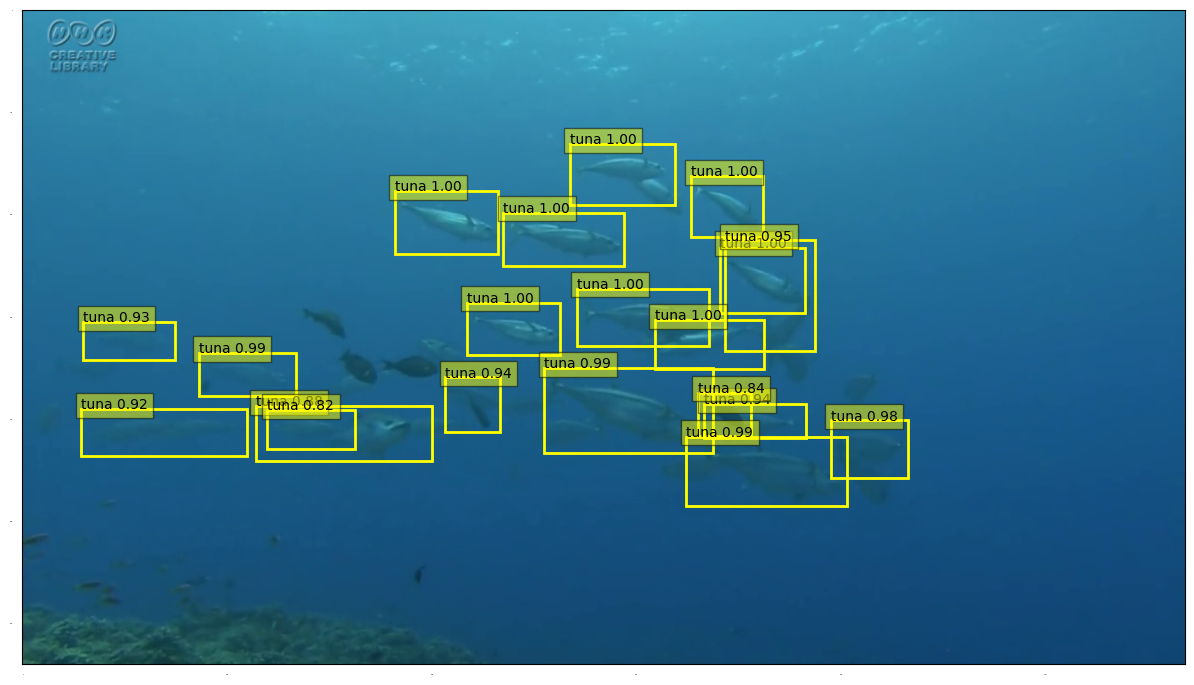

In this notebook, we use underwater tuna images to perform transfer learning of a trained Faster R-CNN model provided by TorchVision, a library in PyTorch.

[4]:

# Import modules

import datetime

import numpy as np

import matplotlib.pyplot as plt

import cv2 # cv2 is an image processing library

[5]:

# Settings for displaying images

plt.rcParams['axes.grid'] = False

plt.rcParams['xtick.labelsize'] = False

plt.rcParams['ytick.labelsize'] = False

plt.rcParams['xtick.top'] = False

plt.rcParams['xtick.bottom'] = False

plt.rcParams['ytick.left'] = False

plt.rcParams['ytick.right'] = False

plt.rcParams['figure.figsize'] = [10, 5]

7.1. Preparing a dataset

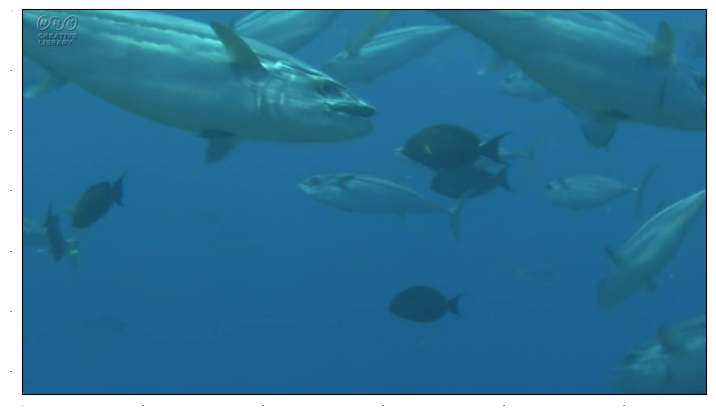

First, download a video of a tuna swimming. This video is from the NHK Creative Library. For the terms of use, please refer to this page.

[6]:

!wget -O tuna.mp4 https://www9.nhk.or.jp/das/movie/D0002031/D0002031658_00000_V_000.mp4

--2025-01-22 05:41:41-- https://www9.nhk.or.jp/das/movie/D0002031/D0002031658_00000_V_000.mp4

Resolving www9.nhk.or.jp (www9.nhk.or.jp)... 202.79.241.203, 101.102.235.203, 202.79.241.44, ...

Connecting to www9.nhk.or.jp (www9.nhk.or.jp)|202.79.241.203|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 3628291 (3.5M) [video/mp4]

Saving to: ‘tuna.mp4’

tuna.mp4 100%[===================>] 3.46M 6.13MB/s in 0.6s

2025-01-22 05:41:42 (6.13 MB/s) - ‘tuna.mp4’ saved [3628291/3628291]

Eight frames from the video are converted to NumPy arrays and stored in a list named images. Here the frames are selected at random, but in practice it is better to choose frames in which the fish are shown most clearly.

[7]:

num_images = 8 # number of images extracted

np.random.seed(0) # Fix the seed of random numbers to reproduce results

# Video capture

vcapture = cv2.VideoCapture('tuna.mp4')

# Number of frames

num_frame = int(vcapture.get(cv2.CAP_PROP_FRAME_COUNT))

# Frame number to be retrieved

frames = np.sort(np.random.choice(num_frame, num_images, replace=False))

print('Frame number:', frames)

# If the printed frame numbers differ from the output shown below, uncomment and run the following line

# frames = np.array([198, 395, 511, 708, 885, 922, 1016, 1040])

Frame number: [ 198 395 511 708 885 922 1016 1040]
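The sampling step above can be sketched without OpenCV or NumPy. This is only a minimal illustration using the standard library: the helper name sample_frame_indices and the frame count 1100 are hypothetical, and because it uses Python's random module instead of np.random, it will not reproduce the frame numbers printed above.

```python
import random

def sample_frame_indices(num_frames, num_samples, seed=0):
    """Pick num_samples distinct frame indices in [0, num_frames), sorted."""
    rng = random.Random(seed)  # a local generator leaves the global random state untouched
    return sorted(rng.sample(range(num_frames), num_samples))

# Hypothetical frame count; the real value comes from cv2.CAP_PROP_FRAME_COUNT
indices = sample_frame_indices(num_frames=1100, num_samples=8)
print(indices)
```

Sampling without replacement guarantees that no frame is picked twice, and fixing the seed makes the selection repeatable across runs.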

[8]:

# images: List of NumPy arrays of images

images = []

for frame in frames:
    vcapture.set(cv2.CAP_PROP_POS_FRAMES, frame)  # seek to the frame to be read
    success, image = vcapture.read()
    image = image[..., ::-1]  # cv2 colors are BGR, so convert to RGB
    height, width = image.shape[:2]
    # Resize to a height of 640 while keeping the aspect ratio
    height, width = 640, 640 * width // height
    image = cv2.resize(image, (width, height))
    # Append to images
    images.append(image)
    # Display the image
    plt.imshow(image)
    plt.show()
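The resize arithmetic in the loop above can be checked in isolation. The following is a small sketch; the function name resized_shape is hypothetical, and the 1920x1080 source resolution is an assumption used only for illustration.

```python
def resized_shape(height, width, target_height=640):
    """Return (new_height, new_width), keeping the aspect ratio with integer arithmetic."""
    return target_height, target_height * width // height

# Assumed example: a frame that is 1920 pixels wide and 1080 pixels high
new_height, new_width = resized_shape(height=1080, width=1920)
print(new_height, new_width)
```

Note that cv2.resize takes the target size as (width, height), which is the reverse of the (height, width) order of a NumPy image shape.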

Creating ground-truth bounding boxes

Download the Colab utility functions for annotating bounding boxes from the TensorFlow models repository on GitHub.

[9]:

!wget "https://raw.githubusercontent.com/tensorflow/models/master/research/object_detection/utils/colab_utils.py"

import colab_utils

--2025-01-22 05:41:46-- https://raw.githubusercontent.com/tensorflow/models/master/research/object_detection/utils/colab_utils.py

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.108.133, 185.199.109.133, 185.199.110.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.108.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 19472 (19K) [text/plain]

Saving to: ‘colab_utils.py’

colab_utils.py 100%[===================>] 19.02K --.-KB/s in 0s

2025-01-22 05:41:46 (90.3 MB/s) - ‘colab_utils.py’ saved [19472/19472]

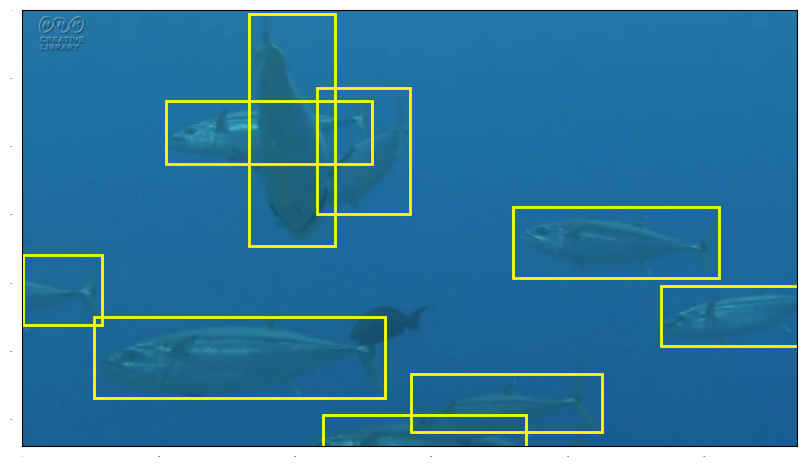

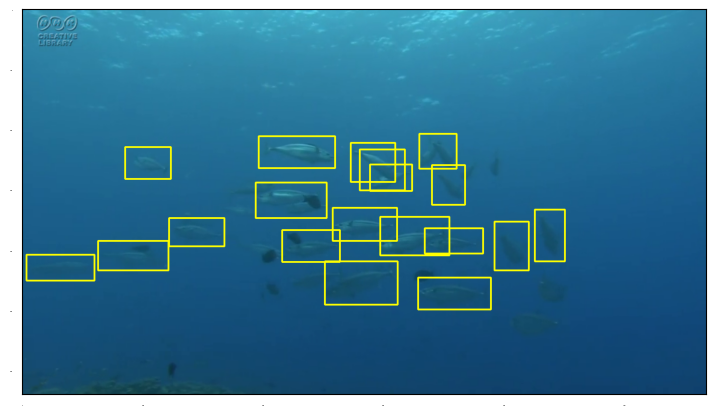

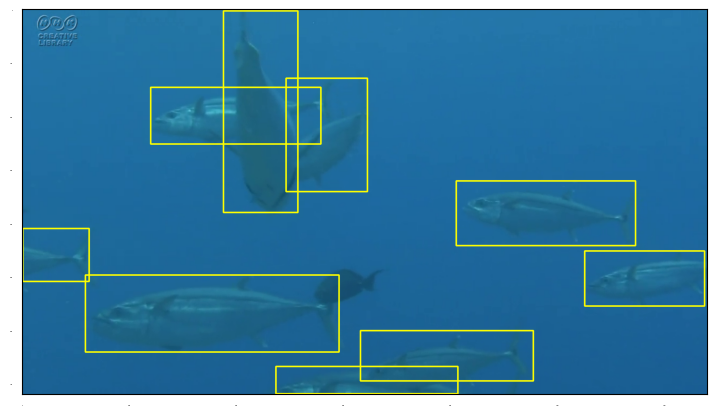

Creating bounding boxes for tunas

Drag on the image to create bounding boxes around the tunas, then click the "submit" and "next image" buttons.

Note: In this task, there are only two classes of objects: tuna and other. If you want to distinguish between objects of multiple classes, you must create a separate set of bounding boxes for each class.

[10]:

gt_boxes = []

colab_utils.annotate(images, box_storage_pointer=gt_boxes)

'--boxes array populated--'

'--boxes array populated--'

'--boxes array populated--'

'--boxes array populated--'

'--boxes array populated--'

The resulting gt_boxes is a list of NumPy arrays of shape (number of boxes, 4), where each row is

[ymin,xmin,ymax,xmax]

Each value is a position relative to the whole image, with the image treated as a 1.0 x 1.0 square.
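To make the box format concrete, here is a minimal sketch of converting one normalized [ymin, xmin, ymax, xmax] row into pixel coordinates in [x0, y0, x1, y1] order. The helper name to_pixel_xyxy and the example box are hypothetical.

```python
def to_pixel_xyxy(box, height, width):
    """Convert a normalized [ymin, xmin, ymax, xmax] box to pixel [x0, y0, x1, y1]."""
    ymin, xmin, ymax, xmax = box
    # x values scale with the image width, y values with the image height
    return [xmin * width, ymin * height, xmax * width, ymax * height]

# Hypothetical box on an image that is 1000 pixels wide and 640 pixels high
pixel_box = to_pixel_xyxy([0.25, 0.5, 0.75, 1.0], height=640, width=1000)
print(pixel_box)
```

Both the scaling and the reordering matter: the annotation tool stores y before x, while the pixel format used later in this notebook stores x before y.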

[11]:

gt_boxes

[11]:

[]

If you would rather skip the manual annotation, run the following cell to load bounding boxes prepared in advance.

[33]:

#gt_boxes will be overwritten if this cell is executed.

gt_boxes = [

np.array([[7.73333549e-03, 2.91996482e-01, 5.37733335e-01, 4.01934916e-01],

[2.07733335e-01, 1.84696570e-01, 3.51066669e-01, 4.50307828e-01],

[1.77733335e-01, 3.79947230e-01, 4.66066669e-01, 4.98680739e-01],

[4.51066669e-01, 6.32365875e-01, 6.11066669e-01, 8.97097625e-01],

[7.02733335e-01, 9.23482850e-02, 8.86066669e-01, 4.66138962e-01],

[5.61079543e-01, 8.79507476e-04, 7.19412877e-01, 1.02022867e-01],

[6.32746210e-01, 8.24098505e-01, 7.67746210e-01, 1.00000000e+00],

[9.27746210e-01, 3.87862797e-01, 9.99412877e-01, 6.48197010e-01],

[8.34412877e-01, 5.00439754e-01, 9.64412877e-01, 7.46701847e-01]]),

np.array([[0.25441288, 0.16622691, 0.35441288, 0.34652595],

[0.41774621, 0.00879507, 0.54107954, 0.21987687],

[0.40274621, 0.14423923, 0.63107954, 0.47141601],

[0.38774621, 0.25769569, 0.63607954, 0.66314864],

[0.28941288, 0.66226913, 0.60941288, 0.87247142],

[0.63441288, 0.80914688, 0.76441288, 0.99912049],

[0.71441288, 0.24538259, 0.92441288, 0.42832014],

[0.88941288, 0.23658751, 0.99607954, 0.46701847]]),

np.array([[0.25274621, 0. , 0.36607954, 0.19085312],

[0.25441288, 0.2823219 , 0.36941288, 0.51187335],

[0.39274621, 0.03869833, 0.65941288, 0.61477573],

[0.47107954, 0.52594547, 0.69607954, 0.88566403],

[0.68441288, 0.75373791, 0.88441288, 0.98592788]]),

np.array([[0.25274621, 0.15655233, 0.40441288, 0.52418646],

[0.40441288, 0.39753738, 0.60774621, 0.65435356],

[0.40274621, 0.76077397, 0.54274621, 0.92612137],

[0.44941288, 0.83377309, 0.78107954, 0.99736148],

[0.79274621, 0.86455585, 0.99441288, 0.99824099]]),

np.array([[0.25441288, 0.32014072, 0.29774621, 0.38346526],

[0.25607954, 0.3878628 , 0.29941288, 0.46437995],

[0.29274621, 0.39841689, 0.34274621, 0.49868074],

[0.27774621, 0.54265611, 0.36774621, 0.63324538],

[0.31607954, 0.64643799, 0.42774621, 0.69305189],

[0.41774621, 0.61301671, 0.47107954, 0.69832894],

[0.39607954, 0.57519789, 0.46274621, 0.63588391],

[0.44441288, 0.58399296, 0.54607954, 0.67194371],

[0.48441288, 0.69041337, 0.55774621, 0.73526825],

[0.58274621, 0.73702726, 0.66607954, 0.77572559],

[0.57274621, 0.64995602, 0.61107954, 0.72647318],

[0.56107954, 0.61565523, 0.67941288, 0.7299912 ],

[0.63607954, 0.58487247, 0.68107954, 0.66842568],

[0.60107954, 0.48988566, 0.68441288, 0.60598065],

[0.57607954, 0.5180299 , 0.60441288, 0.59806508],

[0.42274621, 0.3649956 , 0.56607954, 0.49868074],

[0.38774621, 0.43887423, 0.46274621, 0.54177661],

[0.38441288, 0.35708004, 0.44441288, 0.43007916],

[0.56607954, 0.21811785, 0.60774621, 0.2823219 ],

[0.56107954, 0.17502199, 0.61274621, 0.22691293],

[0.52941288, 0.07475814, 0.58441288, 0.16534741],

[0.43607954, 0.10905893, 0.50774621, 0.19525066],

[0.47107954, 0.33861038, 0.52107954, 0.38698329]]),

np.array([[0.27607954, 0.32717678, 0.36774621, 0.41248901],

[0.32441288, 0.43271768, 0.38274621, 0.52066843],

[0.25441288, 0.53034301, 0.32607954, 0.57783641],

[0.26941288, 0.57871592, 0.34441288, 0.64028144],

[0.36774621, 0.60422164, 0.45941288, 0.68161829],

[0.42274621, 0.62796834, 0.53607954, 0.68337731],

[0.50774621, 0.64291996, 0.57774621, 0.68601583],

[0.54107954, 0.70360598, 0.60941288, 0.74230431],

[0.63441288, 0.69305189, 0.70774621, 0.76077397],

[0.67607954, 0.70360598, 0.76107954, 0.75989446],

[0.66107954, 0.59014952, 0.75941288, 0.70360598],

[0.59441288, 0.5760774 , 0.65774621, 0.67370273],

[0.53441288, 0.45030783, 0.66941288, 0.58487247],

[0.43107954, 0.4819701 , 0.50441288, 0.59894459],

[0.46441288, 0.54177661, 0.53274621, 0.62884785],

[0.44774621, 0.38170624, 0.52107954, 0.46437995],

[0.48774621, 0.34036939, 0.55774621, 0.40369393],

[0.59941288, 0.26121372, 0.68441288, 0.33421284],

[0.62607954, 0.45030783, 0.67941288, 0.50307828],

[0.56107954, 0.35532102, 0.63607954, 0.46086192],

[0.52607954, 0.16007036, 0.59274621, 0.235708 ],

[0.60607954, 0.12137203, 0.66607954, 0.1882146 ],

[0.60941288, 0.05980651, 0.67441288, 0.12313105],

[0.52107954, 0.01319261, 0.57774621, 0.09234828],

[0.60941288, 0.21899736, 0.67274621, 0.28496042]]),

np.array([[0.35607954, 0.11433597, 0.45441288, 0.19788918],

[0.28607954, 0.2348285 , 0.38441288, 0.34476693],

[0.28107954, 0.38610378, 0.40941288, 0.47229551],

[0.26774621, 0.50043975, 0.37441288, 0.56992084],

[0.35607954, 0.48460862, 0.42774621, 0.5408971 ],

[0.36607954, 0.52682498, 0.45274621, 0.57783641],

[0.31107954, 0.58751099, 0.41441288, 0.63940193],

[0.41941288, 0.64643799, 0.58607954, 0.71328056],

[0.48441288, 0.55936675, 0.60274621, 0.6473175 ],

[0.50774621, 0.5171504 , 0.60441288, 0.59894459],

[0.61774621, 0.64116095, 0.72441288, 0.70888303],

[0.66774621, 0.56904134, 0.74441288, 0.65699208],

[0.61274621, 0.43711522, 0.71607954, 0.54969217],

[0.48607954, 0.45030783, 0.57107954, 0.53210202],

[0.55441288, 0.36323659, 0.63607954, 0.46086192],

[0.42274621, 0.32365875, 0.49774621, 0.43095866],

[0.46107954, 0.37291117, 0.51107954, 0.44327177],

[0.43607954, 0.17326297, 0.50274621, 0.26561126],

[0.52441288, 0.19349164, 0.61941288, 0.29639402]]),

np.array([[0.35607954, 0.14863676, 0.44107954, 0.21899736],

[0.32941288, 0.34564644, 0.41441288, 0.45822339],

[0.34441288, 0.4819701 , 0.45274621, 0.54969217],

[0.39607954, 0.51011434, 0.47107954, 0.56904134],

[0.32941288, 0.59630607, 0.42274621, 0.63764292],

[0.32107954, 0.58223395, 0.41107954, 0.62005277],

[0.40274621, 0.59806508, 0.51107954, 0.64643799],

[0.51774621, 0.75021988, 0.65774621, 0.79419525],

[0.55107954, 0.69129288, 0.67941288, 0.74318382],

[0.56607954, 0.59102902, 0.63441288, 0.67458223],

[0.53607954, 0.52418646, 0.64107954, 0.62445031],

[0.51274621, 0.45470536, 0.60274621, 0.54793316],

[0.65441288, 0.44327177, 0.76607954, 0.54969217],

[0.69441288, 0.58135444, 0.78607954, 0.69041337],

[0.57441288, 0.37994723, 0.65607954, 0.46525945],

[0.44941288, 0.34212841, 0.54441288, 0.44766931],

[0.54274621, 0.21723835, 0.61941288, 0.29639402],

[0.60107954, 0.11257696, 0.67941288, 0.21459982],

[0.63774621, 0.00351803, 0.70607954, 0.10905893]])]

Let’s overlay the bounding boxes on an image.

[34]:

# A function to display an image with its bounding boxes overlaid
import matplotlib.pyplot as plt

def image_box(idx):
    img = images[idx]
    height, width = img.shape[:2]
    boxes = gt_boxes[idx]
    plt.figure(figsize=(10, 10))
    plt.imshow(img)
    # Draw the rectangles
    currentAxis = plt.gca()
    for box in boxes:
        # Scale normalized [ymin, xmin, ymax, xmax] to pixel coordinates
        ymin, xmin, ymax, xmax = box * np.array([height, width, height, width])
        coords = (xmin, ymin), xmax - xmin + 1, ymax - ymin + 1
        currentAxis.add_patch(plt.Rectangle(*coords, fill=False, edgecolor='yellow', linewidth=2))
    plt.show()

[35]:

image_box(0)

Preparing the dataset class

Subclass torch.utils.data.Dataset and override its constructor and the two methods __len__ and __getitem__ to fit our dataset.

Define __getitem__ to return the following items.

image: a PIL Image of size (H, W)

target: a dict containing the following fields

boxes (FloatTensor[N, 4]): the coordinates of the N bounding boxes in [x0, y0, x1, y1] format, ranging from 0 to W and 0 to H

labels (Int64Tensor[N]): the label for each bounding box

image_id (Int64Tensor[1]): an image identifier

area (Tensor[N]): the area of the bounding box. This is used during evaluation with the COCO metric, to separate the metric scores between small, medium and large boxes.

iscrowd (UInt8Tensor[N]): instances with iscrowd=True will be ignored during evaluation.
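Before building the target dictionary, it can help to sanity-check the pixel boxes, since boxes with zero width or height can cause errors during training. The following is a plain-Python sketch; the helper names box_area and is_valid_box and the example boxes are hypothetical, and the area formula matches the description of the area field above.

```python
def box_area(box):
    """Area of a pixel box given as [x0, y0, x1, y1]."""
    x0, y0, x1, y1 = box
    return (x1 - x0) * (y1 - y0)

def is_valid_box(box, width, height):
    """True if the box lies inside the image and has positive width and height."""
    x0, y0, x1, y1 = box
    return 0 <= x0 < x1 <= width and 0 <= y0 < y1 <= height

boxes = [[10.0, 20.0, 110.0, 70.0],   # a 100 x 50 box
         [30.0, 40.0, 30.0, 90.0]]    # degenerate: zero width
for box in boxes:
    print(box, box_area(box), is_valid_box(box, width=1137, height=640))
```

A check like this is cheap to run once over all annotations, and it catches accidental clicks made in the annotation tool.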

[36]:

import os

import numpy as np

import torch

import torch.utils.data

from PIL import Image

class TunaDataset(torch.utils.data.Dataset):
    def __init__(self, images, gt_boxes, transforms=None):
        self.imgs = images
        self.annotations = gt_boxes
        self.transforms = transforms

    def __getitem__(self, idx):
        img = self.imgs[idx]
        height, width = img.shape[:2]
        # Scale the boxes from normalized [0, 1] coordinates back to pixels
        boxes = self.annotations[idx] * np.array([height, width, height, width])
        boxes = boxes[:, [1, 0, 3, 2]]  # y0 x0 y1 x1 -> x0 y0 x1 y1
        num_objs = len(boxes)
        # Convert to a PIL image for use with torchvision
        img = Image.fromarray(img)
        # Convert boxes to a Torch tensor
        boxes = torch.as_tensor(boxes, dtype=torch.float32)
        # Class labels: there is only one class (tuna = 1) for now
        labels = torch.ones((num_objs,), dtype=torch.int64)
        image_id = torch.tensor([idx])
        area = (boxes[:, 3] - boxes[:, 1]) * (boxes[:, 2] - boxes[:, 0])
        # iscrowd flags: assume that no instance is a crowd
        iscrowd = torch.zeros((num_objs,), dtype=torch.int64)
        # Create a dictionary of ground-truth data
        target = {}
        target["boxes"] = boxes
        target["labels"] = labels
        target["image_id"] = image_id
        target["area"] = area
        target["iscrowd"] = iscrowd
        # Data augmentation
        if self.transforms is not None:
            img, target = self.transforms(img, target)
        return img, target  # image and its annotations

    def __len__(self):
        return len(self.imgs)

To see how this dataset class works, let’s apply it to our eight images without any transforms.

As shown below, dataset[i] returns a PIL image and a dictionary of annotation data for the \(i\)th image.

[37]:

dataset = TunaDataset(images, gt_boxes)
for i in range(8):
    print(i)
    print(dataset[i])
    plt.imshow(dataset[i][0])
    plt.show()

0

(<PIL.Image.Image image mode=RGB size=1137x640 at 0x783DE0F62850>, {'boxes': tensor([[3.3200e+02, 4.9493e+00, 4.5700e+02, 3.4415e+02],

[2.1000e+02, 1.3295e+02, 5.1200e+02, 2.2468e+02],

[4.3200e+02, 1.1375e+02, 5.6700e+02, 2.9828e+02],

[7.1900e+02, 2.8868e+02, 1.0200e+03, 3.9108e+02],

[1.0500e+02, 4.4975e+02, 5.3000e+02, 5.6708e+02],

[1.0000e+00, 3.5909e+02, 1.1600e+02, 4.6042e+02],

[9.3700e+02, 4.0496e+02, 1.1370e+03, 4.9136e+02],

[4.4100e+02, 5.9376e+02, 7.3700e+02, 6.3962e+02],

[5.6900e+02, 5.3402e+02, 8.4900e+02, 6.1722e+02]]), 'labels': tensor([1, 1, 1, 1, 1, 1, 1, 1, 1]), 'image_id': tensor([0]), 'area': tensor([42399.9961, 27703.4629, 24911.9980, 30822.3984, 49866.6562, 11653.3350,

17279.9980, 13576.5430, 23296.0039]), 'iscrowd': tensor([0, 0, 0, 0, 0, 0, 0, 0, 0])})

1

(<PIL.Image.Image image mode=RGB size=1137x640 at 0x783DDA6B0A90>, {'boxes': tensor([[ 189.0000, 162.8242, 394.0000, 226.8242],

[ 10.0000, 267.3576, 250.0000, 346.2909],

[ 164.0000, 257.7576, 536.0000, 403.8909],

[ 293.0000, 248.1576, 754.0000, 407.0909],

[ 753.0000, 185.2242, 992.0000, 390.0242],

[ 920.0000, 406.0242, 1136.0000, 489.2242],

[ 279.0000, 457.2242, 487.0000, 591.6243],

[ 269.0000, 569.2242, 531.0000, 637.4909]]), 'labels': tensor([1, 1, 1, 1, 1, 1, 1, 1]), 'image_id': tensor([1]), 'area': tensor([13120.0000, 18943.9961, 54361.5977, 73268.2656, 48947.1953, 17971.2031,

27955.2051, 17885.8652]), 'iscrowd': tensor([0, 0, 0, 0, 0, 0, 0, 0])})

2

(<PIL.Image.Image image mode=RGB size=1137x640 at 0x783DE0627A90>, {'boxes': tensor([[ 0.0000, 161.7576, 217.0000, 234.2909],

[ 321.0000, 162.8242, 582.0000, 236.4242],

[ 44.0000, 251.3576, 699.0000, 422.0242],

[ 598.0000, 301.4909, 1007.0000, 445.4909],

[ 857.0000, 438.0242, 1121.0000, 566.0242]]), 'labels': tensor([1, 1, 1, 1, 1]), 'image_id': tensor([2]), 'area': tensor([ 15739.7354, 19209.5977, 111786.6562, 58896.0000, 33792.0000]), 'iscrowd': tensor([0, 0, 0, 0, 0])})

3

(<PIL.Image.Image image mode=RGB size=1137x640 at 0x783DE064D250>, {'boxes': tensor([[ 178.0000, 161.7576, 596.0000, 258.8242],

[ 452.0000, 258.8242, 744.0000, 388.9576],

[ 865.0000, 257.7576, 1053.0000, 347.3576],

[ 948.0000, 287.6242, 1134.0000, 499.8909],

[ 983.0000, 507.3576, 1135.0000, 636.4243]]), 'labels': tensor([1, 1, 1, 1, 1]), 'image_id': tensor([3]), 'area': tensor([40573.8711, 37998.9336, 16844.8008, 39481.5977, 19618.1348]), 'iscrowd': tensor([0, 0, 0, 0, 0])})

4

(<PIL.Image.Image image mode=RGB size=1137x640 at 0x783DDA66EE10>, {'boxes': tensor([[364.0000, 162.8242, 436.0000, 190.5576],

[441.0000, 163.8909, 528.0000, 191.6242],

[453.0000, 187.3576, 567.0000, 219.3576],

[617.0000, 177.7576, 720.0000, 235.3576],

[735.0000, 202.2909, 788.0000, 273.7576],

[697.0000, 267.3576, 794.0000, 301.4909],

[654.0000, 253.4909, 723.0000, 296.1576],

[664.0000, 284.4243, 764.0000, 349.4909],

[785.0000, 310.0242, 836.0000, 356.9576],

[838.0000, 372.9576, 882.0000, 426.2909],

[739.0000, 366.5576, 826.0000, 391.0909],

[700.0000, 359.0909, 830.0000, 434.8242],

[665.0000, 407.0909, 760.0000, 435.8909],

[557.0000, 384.6909, 689.0000, 438.0242],

[589.0000, 368.6909, 680.0000, 386.8242],

[415.0000, 270.5576, 567.0000, 362.2909],

[499.0000, 248.1576, 616.0000, 296.1576],

[406.0000, 246.0242, 489.0000, 284.4243],

[248.0000, 362.2909, 321.0000, 388.9576],

[199.0000, 359.0909, 258.0000, 392.1576],

[ 85.0000, 338.8242, 188.0000, 374.0242],

[124.0000, 279.0909, 222.0000, 324.9576],

[385.0000, 301.4909, 440.0000, 333.4909]]), 'labels': tensor([1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1]), 'image_id': tensor([4]), 'area': tensor([ 1996.7992, 2412.8003, 3648.0000, 5932.8008, 3787.7329, 3310.9331,

2943.9993, 6506.6650, 2393.6008, 2346.6658, 2134.3994, 9845.3340,

2735.9988, 7039.9971, 1650.1332, 13943.4629, 5615.9980, 3187.2007,

1946.6682, 1950.9324, 3625.5979, 4494.9336, 1760.0000]), 'iscrowd': tensor([0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0])})

5

(<PIL.Image.Image image mode=RGB size=1137x640 at 0x783DDA734CD0>, {'boxes': tensor([[372.0000, 176.6909, 469.0000, 235.3576],

[492.0000, 207.6242, 592.0000, 244.9576],

[603.0000, 162.8242, 657.0000, 208.6909],

[658.0000, 172.4242, 728.0000, 220.4242],

[687.0000, 235.3576, 775.0000, 294.0242],

[714.0000, 270.5576, 777.0000, 343.0909],

[731.0000, 324.9576, 780.0000, 369.7576],

[800.0000, 346.2909, 844.0000, 390.0242],

[788.0000, 406.0242, 865.0000, 452.9576],

[800.0000, 432.6909, 864.0000, 487.0909],

[671.0000, 423.0909, 800.0000, 486.0242],

[655.0000, 380.4243, 766.0000, 420.9576],

[512.0000, 342.0242, 665.0000, 428.4243],

[548.0000, 275.8909, 681.0000, 322.8242],

[616.0000, 297.2242, 715.0000, 340.9576],

[434.0000, 286.5576, 528.0000, 333.4909],

[387.0000, 312.1576, 459.0000, 356.9576],

[297.0000, 383.6242, 380.0000, 438.0242],

[512.0000, 400.6909, 572.0000, 434.8242],

[404.0000, 359.0909, 524.0000, 407.0909],

[182.0000, 336.6909, 268.0000, 379.3576],

[138.0000, 387.8909, 214.0000, 426.2909],

[ 68.0000, 390.0242, 140.0000, 431.6242],

[ 15.0000, 333.4909, 105.0000, 369.7576],

[249.0000, 390.0242, 324.0000, 430.5576]]), 'labels': tensor([1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1]), 'image_id': tensor([5]), 'area': tensor([ 5690.6670, 3733.3345, 2476.7993, 3360.0000, 5162.6660, 4569.5996,

2195.1995, 1924.2668, 3613.8679, 3481.5996, 8118.3979, 4499.1992,

13219.2041, 6242.1357, 4329.6006, 4411.7319, 3225.6013, 4515.1997,

2047.9999, 5760.0000, 3669.3325, 2918.3994, 2995.2004, 3263.9993,

3040.0017]), 'iscrowd': tensor([0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0])})

6

(<PIL.Image.Image image mode=RGB size=1137x640 at 0x783DE0F296D0>, {'boxes': tensor([[130.0000, 227.8909, 225.0000, 290.8242],

[267.0000, 183.0909, 392.0000, 246.0242],

[439.0000, 179.8909, 537.0000, 262.0242],

[569.0000, 171.3576, 648.0000, 239.6242],

[551.0000, 227.8909, 615.0000, 273.7576],

[599.0000, 234.2909, 657.0000, 289.7576],

[668.0000, 199.0909, 727.0000, 265.2242],

[735.0000, 268.4243, 811.0000, 375.0909],

[636.0000, 310.0242, 736.0000, 385.7576],

[588.0000, 324.9576, 681.0000, 386.8242],

[729.0000, 395.3576, 806.0000, 463.6242],

[647.0000, 427.3576, 747.0000, 476.4243],

[497.0000, 392.1576, 625.0000, 458.2909],

[512.0000, 311.0909, 605.0000, 365.4909],

[413.0000, 354.8242, 524.0000, 407.0909],

[368.0000, 270.5576, 490.0000, 318.5576],

[424.0000, 295.0909, 504.0000, 327.0909],

[197.0000, 279.0909, 302.0000, 321.7576],

[220.0000, 335.6242, 337.0000, 396.4243]]), 'labels': tensor([1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1]), 'image_id': tensor([6]), 'area': tensor([5978.6680, 7866.6670, 8049.0664, 5393.0664, 2935.4668, 3217.0662,

3901.8665, 8106.6660, 7573.3340, 5753.6001, 5256.5332, 4906.6680,

8465.0664, 5059.1992, 5801.5996, 5856.0000, 2560.0000, 4479.9990,

7113.6021]), 'iscrowd': tensor([0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0])})

7

(<PIL.Image.Image image mode=RGB size=1137x640 at 0x783DE1AE9AD0>, {'boxes': tensor([[169.0000, 227.8909, 249.0000, 282.2909],

[393.0000, 210.8242, 521.0000, 265.2242],

[548.0000, 220.4242, 625.0000, 289.7576],

[580.0000, 253.4909, 647.0000, 301.4909],

[678.0000, 210.8242, 725.0000, 270.5576],

[662.0000, 205.4909, 705.0000, 263.0909],

[680.0000, 257.7576, 735.0000, 327.0909],

[853.0000, 331.3576, 903.0000, 420.9576],

[786.0000, 352.6909, 845.0000, 434.8242],

[672.0000, 362.2909, 767.0000, 406.0242],

[596.0000, 343.0909, 710.0000, 410.2909],

[517.0000, 328.1576, 623.0000, 385.7576],

[504.0000, 418.8242, 625.0000, 490.2909],

[661.0000, 444.4243, 785.0000, 503.0909],

[432.0000, 367.6242, 529.0000, 419.8909],

[389.0000, 287.6242, 509.0000, 348.4243],

[247.0000, 347.3576, 337.0000, 396.4243],

[128.0000, 384.6909, 244.0000, 434.8242],

[ 4.0000, 408.1576, 124.0000, 451.8909]]), 'labels': tensor([1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1]), 'image_id': tensor([7]), 'area': tensor([4351.9995, 6963.1992, 5338.6665, 3216.0000, 2807.4668, 2476.8003,

3813.3340, 4480.0005, 4845.8667, 4154.6670, 7660.7979, 6105.6006,

8647.4639, 7274.6655, 5069.8662, 7296.0020, 4416.0015, 5815.4663,

5248.0005]), 'iscrowd': tensor([0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0])})

Datasets using transforms

Next, we create Datasets with transforms. First, download the functions for training and evaluation from https://github.com/pytorch/vision.git

[38]:

%cd /content

!wget https://raw.githubusercontent.com/pytorch/vision/v0.15.2/references/detection/utils.py -O utils.py

!wget https://raw.githubusercontent.com/pytorch/vision/v0.15.2/references/detection/transforms.py -O transforms.py

!wget https://raw.githubusercontent.com/pytorch/vision/v0.15.2/references/detection/engine.py -O engine.py

!wget https://raw.githubusercontent.com/pytorch/vision/v0.15.2/references/detection/coco_eval.py -O coco_eval.py

!wget https://raw.githubusercontent.com/pytorch/vision/v0.15.2/references/detection/coco_utils.py -O coco_utils.py

/content

--2025-01-22 05:46:17-- https://raw.githubusercontent.com/pytorch/vision/v0.15.2/references/detection/utils.py

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.108.133, 185.199.109.133, 185.199.110.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.108.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 8388 (8.2K) [text/plain]

Saving to: ‘utils.py’

utils.py 100%[===================>] 8.19K --.-KB/s in 0s

2025-01-22 05:46:17 (69.5 MB/s) - ‘utils.py’ saved [8388/8388]

--2025-01-22 05:46:17-- https://raw.githubusercontent.com/pytorch/vision/v0.15.2/references/detection/transforms.py

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.108.133, 185.199.109.133, 185.199.110.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.108.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 23337 (23K) [text/plain]

Saving to: ‘transforms.py’

transforms.py 100%[===================>] 22.79K --.-KB/s in 0s

2025-01-22 05:46:17 (130 MB/s) - ‘transforms.py’ saved [23337/23337]

--2025-01-22 05:46:18-- https://raw.githubusercontent.com/pytorch/vision/v0.15.2/references/detection/engine.py

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.108.133, 185.199.109.133, 185.199.110.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.108.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 4032 (3.9K) [text/plain]

Saving to: ‘engine.py’

engine.py 100%[===================>] 3.94K --.-KB/s in 0s

2025-01-22 05:46:18 (69.2 MB/s) - ‘engine.py’ saved [4032/4032]

--2025-01-22 05:46:18-- https://raw.githubusercontent.com/pytorch/vision/v0.15.2/references/detection/coco_eval.py

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.108.133, 185.199.109.133, 185.199.110.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.108.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 6447 (6.3K) [text/plain]

Saving to: ‘coco_eval.py’

coco_eval.py 100%[===================>] 6.30K --.-KB/s in 0s

2025-01-22 05:46:18 (95.5 MB/s) - ‘coco_eval.py’ saved [6447/6447]

--2025-01-22 05:46:18-- https://raw.githubusercontent.com/pytorch/vision/v0.15.2/references/detection/coco_utils.py

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.108.133, 185.199.109.133, 185.199.110.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.108.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 8893 (8.7K) [text/plain]

Saving to: ‘coco_utils.py’

coco_utils.py 100%[===================>] 8.68K --.-KB/s in 0s

2025-01-22 05:46:18 (103 MB/s) - ‘coco_utils.py’ saved [8893/8893]

Define a function for data augmentation using the downloaded references/detection functions. Random horizontal flipping and random zoom-out are applied here.

[39]:

from engine import train_one_epoch, evaluate
import utils
import torch.nn as nn
import torchvision  # needed by ToTensor below
import transforms as T

# The transforms.py downloaded above (torchvision v0.15.2) does not provide ToTensor, so we define our own.
class ToTensor(nn.Module):
    def forward(self, image, target):
        image = torchvision.transforms.functional.to_tensor(image)
        return image, target

def get_transform(train):
    transforms = []
    # convert the PIL image into a PyTorch tensor
    transforms.append(ToTensor())
    if train:
        # during training, randomly flip and zoom out the training images
        # and their ground-truth boxes for data augmentation
        transforms.append(T.RandomHorizontalFlip(0.5))
        transforms.append(T.RandomZoomOut(side_range=(1.0, 2.0), p=0.5))
    return T.Compose(transforms)

Note: Resizing and normalization of the image data are handled inside the Faster R-CNN model, so they are not necessary here.
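When an image is flipped left to right, its bounding boxes have to be mirrored as well, which is why the transforms take both the image and the target. The idea can be sketched in plain Python for a single box; the helper name hflip_box is hypothetical, and this is an illustration of the geometry rather than the downloaded implementation.

```python
def hflip_box(box, image_width):
    """Mirror a pixel box [x0, y0, x1, y1] left to right inside an image."""
    x0, y0, x1, y1 = box
    # The old right edge becomes the new left edge, and vice versa
    return [image_width - x1, y0, image_width - x0, y1]

flipped = hflip_box([10, 20, 30, 40], image_width=100)
print(flipped)
```

Swapping the two x values keeps x0 smaller than x1 after the flip, so the box stays in valid [x0, y0, x1, y1] form. Flipping twice returns the original box.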

Creating Datasets and DataLoaders

Create Datasets and DataLoaders, using 6 of the 8 images (chosen after shuffling) as training data and the remaining 2 as evaluation data (= test data).

[40]:

# create datasets using different transformations
dataset = TunaDataset(images, gt_boxes, get_transform(train=True))
dataset_test = TunaDataset(images, gt_boxes, get_transform(train=False))

# Split the data into training data and evaluation data
torch.manual_seed(1)
indices = torch.randperm(len(dataset)).tolist()
dataset = torch.utils.data.Subset(dataset, indices[2:])  # the remaining 6 shuffled indices are used for training
dataset_test = torch.utils.data.Subset(dataset_test, indices[:2])  # the first 2 shuffled indices are held out for evaluation

# Create a training data loader and an evaluation data loader
data_loader = torch.utils.data.DataLoader(
    dataset, batch_size=2, shuffle=True, num_workers=2,
    collate_fn=utils.collate_fn)
data_loader_test = torch.utils.data.DataLoader(
    dataset_test, batch_size=1, shuffle=False, num_workers=2,
    collate_fn=utils.collate_fn)
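A custom collate function is needed because each image has a different number of boxes, so the targets cannot be stacked into a single tensor. The sketch below is a re-implementation for illustration, assuming that the downloaded utils.collate_fn behaves essentially like this; the sample names are stand-ins, not real tensors.

```python
def collate_fn(batch):
    """Turn [(img0, tgt0), (img1, tgt1), ...] into ((img0, img1, ...), (tgt0, tgt1, ...))."""
    return tuple(zip(*batch))

# Stand-in samples: strings replace image tensors, and the box lists differ in length
batch = [("image_a", {"boxes": [[0, 0, 5, 5]]}),
         ("image_b", {"boxes": [[1, 1, 4, 4], [2, 2, 9, 9]]})]
imgs, targets = collate_fn(batch)
print(imgs)
print([len(t["boxes"]) for t in targets])
```

The batch is kept as two tuples, one of images and one of target dictionaries, so that each target keeps its own number of boxes.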

7.2. Loading and configuring a Faster R-CNN model

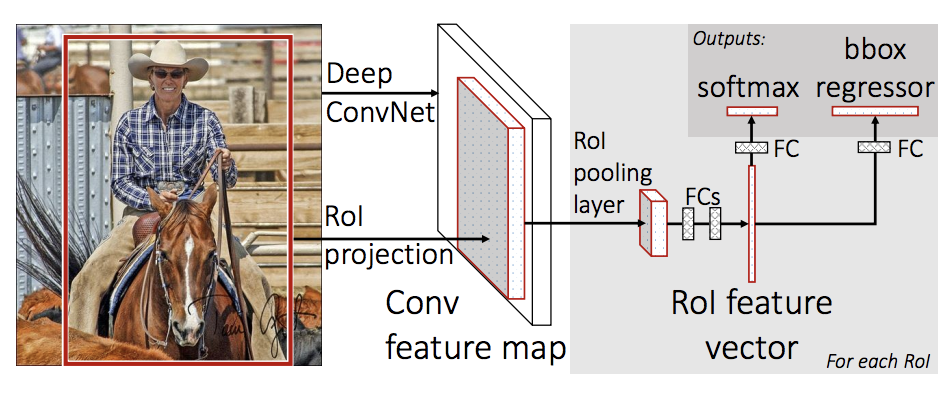

The Faster R-CNN used in this notebook is a model that outputs a set of rectangles likely to contain objects, together with the probability of each class for every rectangle. (For example, the red rectangle in the image below has a 0.9 probability of being a cowboy, a 0.1 probability of being a jockey, etc.).

Here, we take the model previously trained on the COCO dataset and retrain it for the tuna dataset. Since the number of classes to be classified is different, the last output layer must be redefined and trained.

[41]:

import torchvision

from torchvision.models.detection.faster_rcnn import FastRCNNPredictor

# Load the model pretrained on COCO
# (weights="DEFAULT" replaces the deprecated pretrained=True in torchvision 0.13 and later)

model = torchvision.models.detection.fasterrcnn_resnet50_fpn(weights="DEFAULT")

The model architecture is as follows.

Input
  |
(backbone): Residual Network + Feature Pyramid Network
  |
(rpn): RegionProposalNetwork: Proposing bounding boxes
  |
(roi_heads): RoIHeads:
    (box_head): TwoMLPHead: two affine layers
      |
    (box_predictor): FastRCNNPredictor
      |-(cls_score): predicting the class (output 1)
      --(bbox_pred): predicting bounding boxes (output 2)
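To see why the output layer depends on the number of classes, the sizes of the two outputs above can be written out. This is a sketch under the assumption that cls_score produces one score per class (background included) and bbox_pred produces four box coordinates per class; the helper name predictor_output_sizes is hypothetical.

```python
def predictor_output_sizes(num_classes):
    """Sizes of the two FastRCNNPredictor outputs for a given number of classes."""
    cls_score_out = num_classes        # one score per class, background included
    bbox_pred_out = 4 * num_classes    # four box coordinates per class
    return cls_score_out, bbox_pred_out

print(predictor_output_sizes(91))  # COCO-pretrained model (91 class slots)
print(predictor_output_sizes(2))   # this task: background + tuna
```

Because both sizes change when going from the COCO classes to two classes, the pretrained weights of this last layer cannot be reused and the layer has to be replaced.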

[42]:

model

[42]:

FasterRCNN(

(transform): GeneralizedRCNNTransform(

Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

Resize(min_size=(800,), max_size=1333, mode='bilinear')

)

(backbone): BackboneWithFPN(

(body): IntermediateLayerGetter(

(conv1): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)

(bn1): FrozenBatchNorm2d(64, eps=0.0)

(relu): ReLU(inplace=True)

(maxpool): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)

(layer1): Sequential(

(0): Bottleneck(

(conv1): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(64, eps=0.0)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(64, eps=0.0)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(256, eps=0.0)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): FrozenBatchNorm2d(256, eps=0.0)

)

)

(1): Bottleneck(

(conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(64, eps=0.0)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(64, eps=0.0)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(256, eps=0.0)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(64, eps=0.0)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(64, eps=0.0)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(256, eps=0.0)

(relu): ReLU(inplace=True)

)

)

(layer2): Sequential(

(0): Bottleneck(

(conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(128, eps=0.0)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(128, eps=0.0)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(512, eps=0.0)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): FrozenBatchNorm2d(512, eps=0.0)

)

)

(1): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(128, eps=0.0)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(128, eps=0.0)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(512, eps=0.0)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(128, eps=0.0)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(128, eps=0.0)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(512, eps=0.0)

(relu): ReLU(inplace=True)

)

(3): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(128, eps=0.0)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(128, eps=0.0)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(512, eps=0.0)

(relu): ReLU(inplace=True)

)

)

(layer3): Sequential(

(0): Bottleneck(

(conv1): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(256, eps=0.0)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(256, eps=0.0)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(1024, eps=0.0)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(512, 1024, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): FrozenBatchNorm2d(1024, eps=0.0)

)

)

(1): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(256, eps=0.0)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(256, eps=0.0)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(1024, eps=0.0)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(256, eps=0.0)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(256, eps=0.0)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(1024, eps=0.0)

(relu): ReLU(inplace=True)

)

(3): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(256, eps=0.0)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(256, eps=0.0)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(1024, eps=0.0)

(relu): ReLU(inplace=True)

)

(4): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(256, eps=0.0)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(256, eps=0.0)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(1024, eps=0.0)

(relu): ReLU(inplace=True)

)

(5): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(256, eps=0.0)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(256, eps=0.0)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(1024, eps=0.0)

(relu): ReLU(inplace=True)

)

)

(layer4): Sequential(

(0): Bottleneck(

(conv1): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(512, eps=0.0)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(512, eps=0.0)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(2048, eps=0.0)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(1024, 2048, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): FrozenBatchNorm2d(2048, eps=0.0)

)

)

(1): Bottleneck(

(conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(512, eps=0.0)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(512, eps=0.0)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(2048, eps=0.0)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): FrozenBatchNorm2d(512, eps=0.0)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): FrozenBatchNorm2d(512, eps=0.0)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): FrozenBatchNorm2d(2048, eps=0.0)

(relu): ReLU(inplace=True)

)

)

)

(fpn): FeaturePyramidNetwork(

(inner_blocks): ModuleList(

(0): Conv2dNormActivation(

(0): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1))

)

(1): Conv2dNormActivation(

(0): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1))

)

(2): Conv2dNormActivation(

(0): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1))

)

(3): Conv2dNormActivation(

(0): Conv2d(2048, 256, kernel_size=(1, 1), stride=(1, 1))

)

)

(layer_blocks): ModuleList(

(0-3): 4 x Conv2dNormActivation(

(0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

)

(extra_blocks): LastLevelMaxPool()

)

)

(rpn): RegionProposalNetwork(

(anchor_generator): AnchorGenerator()

(head): RPNHead(

(conv): Sequential(

(0): Conv2dNormActivation(

(0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): ReLU(inplace=True)

)

)

(cls_logits): Conv2d(256, 3, kernel_size=(1, 1), stride=(1, 1))

(bbox_pred): Conv2d(256, 12, kernel_size=(1, 1), stride=(1, 1))

)

)

(roi_heads): RoIHeads(

(box_roi_pool): MultiScaleRoIAlign(featmap_names=['0', '1', '2', '3'], output_size=(7, 7), sampling_ratio=2)

(box_head): TwoMLPHead(

(fc6): Linear(in_features=12544, out_features=1024, bias=True)

(fc7): Linear(in_features=1024, out_features=1024, bias=True)

)

(box_predictor): FastRCNNPredictor(

(cls_score): Linear(in_features=1024, out_features=91, bias=True)

(bbox_pred): Linear(in_features=1024, out_features=364, bias=True)

)

)

)

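The last module, (box_predictor), is the output part of the model, called the head.

The output dimension of (cls_score) is the number of predicted classes (91 in the pre-trained model, counting background).

The output dimension of (bbox_pred) is the number of classes x 4, because four bounding-box values are predicted for each class (91 x 4 = 364).

We replace the head in roi_heads.box_predictor with a new one that predicts 2 classes.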

[43]:

# Number of classes to predict (counting background as well)

num_classes = 2 # 1 class (Tuna) + background

# get number of input features for the classifier

in_features = model.roi_heads.box_predictor.cls_score.in_features #1024

# replace the pre-trained head with a new one

model.roi_heads.box_predictor = FastRCNNPredictor(in_features, num_classes)

[44]:

model.roi_heads.box_predictor

[44]:

FastRCNNPredictor(

(cls_score): Linear(in_features=1024, out_features=2, bias=True)

(bbox_pred): Linear(in_features=1024, out_features=8, bias=True)

)
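The output sizes shown above follow directly from the number of classes. As a quick check, the arithmetic can be sketched in plain Python; the helper name `predictor_output_sizes` is hypothetical and is not part of TorchVision.

```python
def predictor_output_sizes(num_classes):
    """Return (cls_score out_features, bbox_pred out_features).

    Hypothetical helper for illustration only; not a TorchVision function.
    """
    cls_out = num_classes        # one score per class (background included)
    bbox_out = num_classes * 4   # four box regression values per class
    return cls_out, bbox_out


print(predictor_output_sizes(91))  # pre-trained head: (91, 364)
print(predictor_output_sizes(2))   # tuna + background: (2, 8)
```

Both results match the out_features values printed for the original and the replaced box_predictor.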

Move the model to the GPU if one is available, then define the optimizer and the learning rate scheduler.

[45]:

device = torch.device('cuda') if torch.cuda.is_available() else torch.device('cpu')

model.to(device)

# construct an optimizer

params = [p for p in model.parameters() if p.requires_grad]

optimizer = torch.optim.Adam(params, lr=0.001, betas=(0.9, 0.999), eps=1e-08, weight_decay=0, amsgrad=False)

# learning rate scheduler that multiplies the learning rate by 0.2 every 20 epochs

lr_scheduler = torch.optim.lr_scheduler.StepLR(optimizer,

step_size=20,

gamma=0.2)
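To see what this schedule does over a 60-epoch run, the learning rate can be computed by hand. This is a minimal sketch in plain Python that assumes the usual step-decay rule (the base rate is multiplied by gamma once every step_size epochs); the function name `step_lr` is hypothetical.

```python
def step_lr(epoch, base_lr=0.001, step_size=20, gamma=0.2):
    """Learning rate in effect during `epoch` under a step-decay rule."""
    return base_lr * gamma ** (epoch // step_size)


# Print the rate at the start and end of each 20-epoch block
for epoch in (0, 19, 20, 39, 40, 59):
    print(f"epoch {epoch:2d}: lr = {step_lr(epoch):.6f}")
```

Under this rule, epochs 0 to 19 use 0.001, epochs 20 to 39 use 0.0002, and epochs 40 to 59 use 0.00004. The training log agrees with these values, except for the first iterations of epoch 0, where a smaller rate is printed; this appears to be a warm-up applied inside train_one_epoch rather than part of the scheduler.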

Training with fixed backbone parameters

The Faster R-CNN model consists of three parts: backbone, rpn, and roi_heads.

Here we freeze the parameters of the backbone so that only rpn and roi_heads are trained.

[46]:

for parameter in model.backbone.parameters():

    parameter.requires_grad = False
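A quick way to confirm that freezing worked is to count the trainable parameters before and after. The sketch below uses a small stand-in network instead of the Faster R-CNN model so that it runs without downloading weights; the module layout and the names `toy` and `count_trainable` are assumptions for illustration only.

```python
import torch
from torch import nn


def count_trainable(module):
    """Number of scalar parameters that still require gradients."""
    return sum(p.numel() for p in module.parameters() if p.requires_grad)


# Stand-in model: a "backbone" followed by a "head" (hypothetical layout)
toy = nn.ModuleDict({
    "backbone": nn.Linear(8, 4),
    "head": nn.Linear(4, 2),
})

before = count_trainable(toy)

# Same pattern as in the notebook: freeze every backbone parameter
for parameter in toy["backbone"].parameters():
    parameter.requires_grad = False

after = count_trainable(toy)
print(before, after)

# Build the optimizer only from the parameters that are still trainable
params = [p for p in toy.parameters() if p.requires_grad]
optimizer = torch.optim.Adam(params, lr=0.001)
```

In the cell order shown in this notebook, the optimizer is created before the backbone is frozen. Frozen parameters receive no gradients, so the optimizer leaves them unchanged, but rebuilding the parameter list after freezing, as in the sketch, keeps the optimizer limited to the parameters that are actually trained.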

Train the model for 60 epochs, evaluating on the test dataset every 10 epochs.

[26]:

# let's train it for 60 epochs

num_epochs = 60

for epoch in range(num_epochs):

    # train for one epoch, printing every 3 iterations

    train_one_epoch(model, optimizer, data_loader, device, epoch, print_freq=3)

    # update the learning rate

    lr_scheduler.step()

    # evaluate on the test dataset every 10 epochs

    if (epoch+1) % 10 == 0:

        evaluate(model, data_loader_test, device=device)

/content/engine.py:30: FutureWarning: `torch.cuda.amp.autocast(args...)` is deprecated. Please use `torch.amp.autocast('cuda', args...)` instead.

with torch.cuda.amp.autocast(enabled=scaler is not None):

Epoch: [0] [0/3] eta: 0:00:10 lr: 0.000500 loss: 1.8902 (1.8902) loss_classifier: 0.5949 (0.5949) loss_box_reg: 0.8558 (0.8558) loss_objectness: 0.3664 (0.3664) loss_rpn_box_reg: 0.0731 (0.0731) time: 3.6491 data: 0.1697 max mem: 1080

Epoch: [0] [2/3] eta: 0:00:01 lr: 0.001000 loss: 1.8902 (1.8451) loss_classifier: 0.5949 (0.8078) loss_box_reg: 0.6996 (0.7164) loss_objectness: 0.2163 (0.2536) loss_rpn_box_reg: 0.0722 (0.0674) time: 1.4319 data: 0.0638 max mem: 1206

Epoch: [0] Total time: 0:00:04 (1.4423 s / it)

Epoch: [1] [0/3] eta: 0:00:01 lr: 0.001000 loss: 0.8244 (0.8244) loss_classifier: 0.2032 (0.2032) loss_box_reg: 0.5002 (0.5002) loss_objectness: 0.0602 (0.0602) loss_rpn_box_reg: 0.0608 (0.0608) time: 0.5173 data: 0.2388 max mem: 1227

Epoch: [1] [2/3] eta: 0:00:00 lr: 0.001000 loss: 1.0763 (1.0429) loss_classifier: 0.3084 (0.2802) loss_box_reg: 0.5706 (0.5516) loss_objectness: 0.1251 (0.1371) loss_rpn_box_reg: 0.0608 (0.0740) time: 0.3628 data: 0.0885 max mem: 1227

Epoch: [1] Total time: 0:00:01 (0.3755 s / it)

Epoch: [2] [0/3] eta: 0:00:01 lr: 0.001000 loss: 0.9332 (0.9332) loss_classifier: 0.2537 (0.2537) loss_box_reg: 0.4144 (0.4144) loss_objectness: 0.2111 (0.2111) loss_rpn_box_reg: 0.0540 (0.0540) time: 0.4445 data: 0.1683 max mem: 1227

Epoch: [2] [2/3] eta: 0:00:00 lr: 0.001000 loss: 1.2907 (1.1945) loss_classifier: 0.3852 (0.3543) loss_box_reg: 0.6988 (0.6375) loss_objectness: 0.0847 (0.1209) loss_rpn_box_reg: 0.0692 (0.0818) time: 0.3332 data: 0.0604 max mem: 1227

Epoch: [2] Total time: 0:00:01 (0.3472 s / it)

Epoch: [3] [0/3] eta: 0:00:01 lr: 0.001000 loss: 1.1568 (1.1568) loss_classifier: 0.2954 (0.2954) loss_box_reg: 0.6887 (0.6887) loss_objectness: 0.0618 (0.0618) loss_rpn_box_reg: 0.1108 (0.1108) time: 0.5185 data: 0.2402 max mem: 1227

Epoch: [3] [2/3] eta: 0:00:00 lr: 0.001000 loss: 1.1568 (1.1078) loss_classifier: 0.2954 (0.2858) loss_box_reg: 0.6887 (0.6319) loss_objectness: 0.1080 (0.1109) loss_rpn_box_reg: 0.1007 (0.0792) time: 0.3599 data: 0.0879 max mem: 1227

Epoch: [3] Total time: 0:00:01 (0.3738 s / it)

Epoch: [4] [0/3] eta: 0:00:01 lr: 0.001000 loss: 1.0901 (1.0901) loss_classifier: 0.3181 (0.3181) loss_box_reg: 0.6547 (0.6547) loss_objectness: 0.0668 (0.0668) loss_rpn_box_reg: 0.0505 (0.0505) time: 0.4451 data: 0.1682 max mem: 1227

Epoch: [4] [2/3] eta: 0:00:00 lr: 0.001000 loss: 1.0901 (1.1176) loss_classifier: 0.3181 (0.3182) loss_box_reg: 0.6547 (0.6810) loss_objectness: 0.0443 (0.0483) loss_rpn_box_reg: 0.0505 (0.0701) time: 0.3342 data: 0.0604 max mem: 1227

Epoch: [4] Total time: 0:00:01 (0.3479 s / it)

Epoch: [5] [0/3] eta: 0:00:01 lr: 0.001000 loss: 0.9763 (0.9763) loss_classifier: 0.3232 (0.3232) loss_box_reg: 0.5184 (0.5184) loss_objectness: 0.0649 (0.0649) loss_rpn_box_reg: 0.0698 (0.0698) time: 0.4878 data: 0.2035 max mem: 1227

Epoch: [5] [2/3] eta: 0:00:00 lr: 0.001000 loss: 0.9785 (1.0504) loss_classifier: 0.3094 (0.2981) loss_box_reg: 0.5665 (0.6149) loss_objectness: 0.0649 (0.0713) loss_rpn_box_reg: 0.0696 (0.0660) time: 0.3528 data: 0.0764 max mem: 1229

Epoch: [5] Total time: 0:00:01 (0.3656 s / it)

Epoch: [6] [0/3] eta: 0:00:01 lr: 0.001000 loss: 1.0587 (1.0587) loss_classifier: 0.3190 (0.3190) loss_box_reg: 0.6667 (0.6667) loss_objectness: 0.0226 (0.0226) loss_rpn_box_reg: 0.0505 (0.0505) time: 0.4497 data: 0.1610 max mem: 1229

Epoch: [6] [2/3] eta: 0:00:00 lr: 0.001000 loss: 1.0587 (0.9518) loss_classifier: 0.3102 (0.2870) loss_box_reg: 0.6667 (0.5828) loss_objectness: 0.0226 (0.0388) loss_rpn_box_reg: 0.0505 (0.0431) time: 0.3406 data: 0.0613 max mem: 1229

Epoch: [6] Total time: 0:00:01 (0.3531 s / it)

Epoch: [7] [0/3] eta: 0:00:01 lr: 0.001000 loss: 1.0202 (1.0202) loss_classifier: 0.2977 (0.2977) loss_box_reg: 0.6502 (0.6502) loss_objectness: 0.0235 (0.0235) loss_rpn_box_reg: 0.0488 (0.0488) time: 0.4964 data: 0.2107 max mem: 1229

Epoch: [7] [2/3] eta: 0:00:00 lr: 0.001000 loss: 0.9203 (0.9387) loss_classifier: 0.2828 (0.2819) loss_box_reg: 0.5471 (0.5808) loss_objectness: 0.0235 (0.0316) loss_rpn_box_reg: 0.0453 (0.0444) time: 0.3521 data: 0.0765 max mem: 1229

Epoch: [7] Total time: 0:00:01 (0.3651 s / it)

Epoch: [8] [0/3] eta: 0:00:01 lr: 0.001000 loss: 0.9887 (0.9887) loss_classifier: 0.2585 (0.2585) loss_box_reg: 0.6257 (0.6257) loss_objectness: 0.0181 (0.0181) loss_rpn_box_reg: 0.0864 (0.0864) time: 0.4385 data: 0.1531 max mem: 1229

Epoch: [8] [2/3] eta: 0:00:00 lr: 0.001000 loss: 0.8732 (0.8746) loss_classifier: 0.2528 (0.2499) loss_box_reg: 0.5375 (0.5476) loss_objectness: 0.0181 (0.0306) loss_rpn_box_reg: 0.0288 (0.0465) time: 0.3343 data: 0.0586 max mem: 1229

Epoch: [8] Total time: 0:00:01 (0.3474 s / it)

Epoch: [9] [0/3] eta: 0:00:01 lr: 0.001000 loss: 1.0244 (1.0244) loss_classifier: 0.2691 (0.2691) loss_box_reg: 0.6695 (0.6695) loss_objectness: 0.0098 (0.0098) loss_rpn_box_reg: 0.0760 (0.0760) time: 0.4352 data: 0.1578 max mem: 1229

Epoch: [9] [2/3] eta: 0:00:00 lr: 0.001000 loss: 0.9754 (0.8399) loss_classifier: 0.2691 (0.2463) loss_box_reg: 0.6156 (0.5294) loss_objectness: 0.0154 (0.0178) loss_rpn_box_reg: 0.0490 (0.0464) time: 0.3346 data: 0.0589 max mem: 1229

Epoch: [9] Total time: 0:00:01 (0.3558 s / it)

creating index...

index created!

Test: [0/2] eta: 0:00:00 model_time: 0.1457 (0.1457) evaluator_time: 0.0793 (0.0793) time: 0.3673 data: 0.1389 max mem: 1229

Test: [1/2] eta: 0:00:00 model_time: 0.1108 (0.1283) evaluator_time: 0.0699 (0.0746) time: 0.2777 data: 0.0717 max mem: 1229

Test: Total time: 0:00:00 (0.3089 s / it)

Averaged stats: model_time: 0.1108 (0.1283) evaluator_time: 0.0699 (0.0746)

Accumulating evaluation results...

DONE (t=0.01s).

IoU metric: bbox

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.192

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.495

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.127

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = -1.000

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.212

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.259

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.017

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.127

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.362

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = -1.000

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.351

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.533

Epoch: [10] [0/3] eta: 0:00:01 lr: 0.001000 loss: 0.7365 (0.7365) loss_classifier: 0.2058 (0.2058) loss_box_reg: 0.4802 (0.4802) loss_objectness: 0.0237 (0.0237) loss_rpn_box_reg: 0.0268 (0.0268) time: 0.5833 data: 0.2989 max mem: 1229

Epoch: [10] [2/3] eta: 0:00:00 lr: 0.001000 loss: 0.8215 (0.8197) loss_classifier: 0.2505 (0.2417) loss_box_reg: 0.4828 (0.5104) loss_objectness: 0.0237 (0.0259) loss_rpn_box_reg: 0.0402 (0.0417) time: 0.3920 data: 0.1095 max mem: 1229

Epoch: [10] Total time: 0:00:01 (0.4121 s / it)

Epoch: [11] [0/3] eta: 0:00:01 lr: 0.001000 loss: 0.9869 (0.9869) loss_classifier: 0.2965 (0.2965) loss_box_reg: 0.6094 (0.6094) loss_objectness: 0.0216 (0.0216) loss_rpn_box_reg: 0.0595 (0.0595) time: 0.5604 data: 0.2807 max mem: 1229

Epoch: [11] [2/3] eta: 0:00:00 lr: 0.001000 loss: 0.8405 (0.7693) loss_classifier: 0.2176 (0.2239) loss_box_reg: 0.5587 (0.4852) loss_objectness: 0.0216 (0.0235) loss_rpn_box_reg: 0.0370 (0.0367) time: 0.3780 data: 0.1010 max mem: 1229

Epoch: [11] Total time: 0:00:01 (0.3914 s / it)

Epoch: [12] [0/3] eta: 0:00:01 lr: 0.001000 loss: 0.9518 (0.9518) loss_classifier: 0.2963 (0.2963) loss_box_reg: 0.6003 (0.6003) loss_objectness: 0.0071 (0.0071) loss_rpn_box_reg: 0.0481 (0.0481) time: 0.4466 data: 0.1647 max mem: 1229

Epoch: [12] [2/3] eta: 0:00:00 lr: 0.001000 loss: 0.7904 (0.7366) loss_classifier: 0.2150 (0.2177) loss_box_reg: 0.5211 (0.4717) loss_objectness: 0.0071 (0.0112) loss_rpn_box_reg: 0.0481 (0.0361) time: 0.3342 data: 0.0594 max mem: 1229

Epoch: [12] Total time: 0:00:01 (0.3472 s / it)

Epoch: [13] [0/3] eta: 0:00:01 lr: 0.001000 loss: 0.8051 (0.8051) loss_classifier: 0.2494 (0.2494) loss_box_reg: 0.4565 (0.4565) loss_objectness: 0.0586 (0.0586) loss_rpn_box_reg: 0.0406 (0.0406) time: 0.4817 data: 0.1978 max mem: 1229

Epoch: [13] [2/3] eta: 0:00:00 lr: 0.001000 loss: 0.8051 (0.7961) loss_classifier: 0.2494 (0.2369) loss_box_reg: 0.5011 (0.4872) loss_objectness: 0.0268 (0.0363) loss_rpn_box_reg: 0.0349 (0.0357) time: 0.3461 data: 0.0718 max mem: 1229

Epoch: [13] Total time: 0:00:01 (0.3599 s / it)

Epoch: [14] [0/3] eta: 0:00:01 lr: 0.001000 loss: 0.7793 (0.7793) loss_classifier: 0.2161 (0.2161) loss_box_reg: 0.4290 (0.4290) loss_objectness: 0.1121 (0.1121) loss_rpn_box_reg: 0.0221 (0.0221) time: 0.4840 data: 0.1951 max mem: 1229

Epoch: [14] [2/3] eta: 0:00:00 lr: 0.001000 loss: 0.7793 (0.7687) loss_classifier: 0.2161 (0.2209) loss_box_reg: 0.4336 (0.4639) loss_objectness: 0.0272 (0.0484) loss_rpn_box_reg: 0.0246 (0.0354) time: 0.3485 data: 0.0702 max mem: 1229

Epoch: [14] Total time: 0:00:01 (0.3619 s / it)

Epoch: [15] [0/3] eta: 0:00:01 lr: 0.001000 loss: 0.8790 (0.8790) loss_classifier: 0.2291 (0.2291) loss_box_reg: 0.5508 (0.5508) loss_objectness: 0.0379 (0.0379) loss_rpn_box_reg: 0.0612 (0.0612) time: 0.4715 data: 0.1911 max mem: 1229

Epoch: [15] [2/3] eta: 0:00:00 lr: 0.001000 loss: 0.7833 (0.7218) loss_classifier: 0.1974 (0.1948) loss_box_reg: 0.5091 (0.4547) loss_objectness: 0.0379 (0.0338) loss_rpn_box_reg: 0.0372 (0.0384) time: 0.3469 data: 0.0723 max mem: 1229

Epoch: [15] Total time: 0:00:01 (0.3609 s / it)

Epoch: [16] [0/3] eta: 0:00:01 lr: 0.001000 loss: 0.7364 (0.7364) loss_classifier: 0.2080 (0.2080) loss_box_reg: 0.4769 (0.4769) loss_objectness: 0.0258 (0.0258) loss_rpn_box_reg: 0.0257 (0.0257) time: 0.4415 data: 0.1553 max mem: 1229

Epoch: [16] [2/3] eta: 0:00:00 lr: 0.001000 loss: 0.6977 (0.6790) loss_classifier: 0.1807 (0.1832) loss_box_reg: 0.4671 (0.4407) loss_objectness: 0.0202 (0.0217) loss_rpn_box_reg: 0.0308 (0.0334) time: 0.3357 data: 0.0597 max mem: 1229

Epoch: [16] Total time: 0:00:01 (0.3499 s / it)

Epoch: [17] [0/3] eta: 0:00:01 lr: 0.001000 loss: 0.7422 (0.7422) loss_classifier: 0.1905 (0.1905) loss_box_reg: 0.5147 (0.5147) loss_objectness: 0.0153 (0.0153) loss_rpn_box_reg: 0.0218 (0.0218) time: 0.4396 data: 0.1594 max mem: 1229

Epoch: [17] [2/3] eta: 0:00:00 lr: 0.001000 loss: 0.6315 (0.6534) loss_classifier: 0.1869 (0.1850) loss_box_reg: 0.3986 (0.4201) loss_objectness: 0.0153 (0.0149) loss_rpn_box_reg: 0.0238 (0.0334) time: 0.3367 data: 0.0595 max mem: 1229

Epoch: [17] Total time: 0:00:01 (0.3630 s / it)

Epoch: [18] [0/3] eta: 0:00:01 lr: 0.001000 loss: 0.5843 (0.5843) loss_classifier: 0.1814 (0.1814) loss_box_reg: 0.3520 (0.3520) loss_objectness: 0.0247 (0.0247) loss_rpn_box_reg: 0.0263 (0.0263) time: 0.6021 data: 0.3093 max mem: 1229

Epoch: [18] [2/3] eta: 0:00:00 lr: 0.001000 loss: 0.6493 (0.6501) loss_classifier: 0.1980 (0.1931) loss_box_reg: 0.4117 (0.4128) loss_objectness: 0.0154 (0.0146) loss_rpn_box_reg: 0.0263 (0.0295) time: 0.3890 data: 0.1090 max mem: 1229

Epoch: [18] Total time: 0:00:01 (0.4035 s / it)

Epoch: [19] [0/3] eta: 0:00:01 lr: 0.001000 loss: 0.7670 (0.7670) loss_classifier: 0.2409 (0.2409) loss_box_reg: 0.4776 (0.4776) loss_objectness: 0.0189 (0.0189) loss_rpn_box_reg: 0.0296 (0.0296) time: 0.4333 data: 0.1542 max mem: 1229

Epoch: [19] [2/3] eta: 0:00:00 lr: 0.001000 loss: 0.5790 (0.6416) loss_classifier: 0.1520 (0.1754) loss_box_reg: 0.3947 (0.4216) loss_objectness: 0.0189 (0.0230) loss_rpn_box_reg: 0.0221 (0.0215) time: 0.3304 data: 0.0556 max mem: 1229

Epoch: [19] Total time: 0:00:01 (0.3437 s / it)

creating index...

index created!

Test: [0/2] eta: 0:00:00 model_time: 0.1138 (0.1138) evaluator_time: 0.0222 (0.0222) time: 0.2286 data: 0.0892 max mem: 1229

Test: [1/2] eta: 0:00:00 model_time: 0.1125 (0.1131) evaluator_time: 0.0222 (0.0299) time: 0.1933 data: 0.0472 max mem: 1229

Test: Total time: 0:00:00 (0.2165 s / it)

Averaged stats: model_time: 0.1125 (0.1131) evaluator_time: 0.0222 (0.0299)

Accumulating evaluation results...

DONE (t=0.01s).

IoU metric: bbox

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.162

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.486

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.022

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = -1.000

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.169

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.429

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.019

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.127

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.327

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = -1.000

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.309

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.600

Epoch: [20] [0/3] eta: 0:00:01 lr: 0.000200 loss: 0.7972 (0.7972) loss_classifier: 0.2144 (0.2144) loss_box_reg: 0.4914 (0.4914) loss_objectness: 0.0168 (0.0168) loss_rpn_box_reg: 0.0746 (0.0746) time: 0.6242 data: 0.3028 max mem: 1229

Epoch: [20] [2/3] eta: 0:00:00 lr: 0.000200 loss: 0.7920 (0.6892) loss_classifier: 0.2144 (0.2046) loss_box_reg: 0.4462 (0.4137) loss_objectness: 0.0386 (0.0315) loss_rpn_box_reg: 0.0305 (0.0393) time: 0.4049 data: 0.1134 max mem: 1229

Epoch: [20] Total time: 0:00:01 (0.4285 s / it)

Epoch: [21] [0/3] eta: 0:00:01 lr: 0.000200 loss: 0.5600 (0.5600) loss_classifier: 0.1859 (0.1859) loss_box_reg: 0.3341 (0.3341) loss_objectness: 0.0176 (0.0176) loss_rpn_box_reg: 0.0224 (0.0224) time: 0.5890 data: 0.2837 max mem: 1229

Epoch: [21] [2/3] eta: 0:00:00 lr: 0.000200 loss: 0.5656 (0.5958) loss_classifier: 0.1823 (0.1790) loss_box_reg: 0.3359 (0.3705) loss_objectness: 0.0174 (0.0169) loss_rpn_box_reg: 0.0317 (0.0294) time: 0.3900 data: 0.1015 max mem: 1229

Epoch: [21] Total time: 0:00:01 (0.4166 s / it)

Epoch: [22] [0/3] eta: 0:00:01 lr: 0.000200 loss: 0.6212 (0.6212) loss_classifier: 0.2074 (0.2074) loss_box_reg: 0.3868 (0.3868) loss_objectness: 0.0057 (0.0057) loss_rpn_box_reg: 0.0213 (0.0213) time: 0.5261 data: 0.2464 max mem: 1229

Epoch: [22] [2/3] eta: 0:00:00 lr: 0.000200 loss: 0.6212 (0.5568) loss_classifier: 0.1984 (0.1714) loss_box_reg: 0.3868 (0.3518) loss_objectness: 0.0115 (0.0158) loss_rpn_box_reg: 0.0213 (0.0178) time: 0.3614 data: 0.0872 max mem: 1229

Epoch: [22] Total time: 0:00:01 (0.3737 s / it)

Epoch: [23] [0/3] eta: 0:00:01 lr: 0.000200 loss: 0.7325 (0.7325) loss_classifier: 0.2596 (0.2596) loss_box_reg: 0.4308 (0.4308) loss_objectness: 0.0097 (0.0097) loss_rpn_box_reg: 0.0323 (0.0323) time: 0.5438 data: 0.2588 max mem: 1237

Epoch: [23] [2/3] eta: 0:00:00 lr: 0.000200 loss: 0.6265 (0.6230) loss_classifier: 0.1891 (0.1958) loss_box_reg: 0.3961 (0.3889) loss_objectness: 0.0097 (0.0111) loss_rpn_box_reg: 0.0273 (0.0272) time: 0.3745 data: 0.0951 max mem: 1237

Epoch: [23] Total time: 0:00:01 (0.3878 s / it)

Epoch: [24] [0/3] eta: 0:00:01 lr: 0.000200 loss: 0.4957 (0.4957) loss_classifier: 0.1469 (0.1469) loss_box_reg: 0.3258 (0.3258) loss_objectness: 0.0061 (0.0061) loss_rpn_box_reg: 0.0168 (0.0168) time: 0.4337 data: 0.1553 max mem: 1237

Epoch: [24] [2/3] eta: 0:00:00 lr: 0.000200 loss: 0.5271 (0.6090) loss_classifier: 0.1688 (0.1813) loss_box_reg: 0.3264 (0.3816) loss_objectness: 0.0078 (0.0167) loss_rpn_box_reg: 0.0241 (0.0294) time: 0.3324 data: 0.0562 max mem: 1237

Epoch: [24] Total time: 0:00:01 (0.3459 s / it)

Epoch: [25] [0/3] eta: 0:00:01 lr: 0.000200 loss: 0.4198 (0.4198) loss_classifier: 0.1238 (0.1238) loss_box_reg: 0.2560 (0.2560) loss_objectness: 0.0204 (0.0204) loss_rpn_box_reg: 0.0195 (0.0195) time: 0.4338 data: 0.1520 max mem: 1237

Epoch: [25] [2/3] eta: 0:00:00 lr: 0.000200 loss: 0.5124 (0.5617) loss_classifier: 0.1688 (0.1849) loss_box_reg: 0.3099 (0.3427) loss_objectness: 0.0167 (0.0145) loss_rpn_box_reg: 0.0195 (0.0196) time: 0.3344 data: 0.0576 max mem: 1237

Epoch: [25] Total time: 0:00:01 (0.3479 s / it)

Epoch: [26] [0/3] eta: 0:00:01 lr: 0.000200 loss: 0.5882 (0.5882) loss_classifier: 0.1748 (0.1748) loss_box_reg: 0.3733 (0.3733) loss_objectness: 0.0100 (0.0100) loss_rpn_box_reg: 0.0301 (0.0301) time: 0.4719 data: 0.1889 max mem: 1237

Epoch: [26] [2/3] eta: 0:00:00 lr: 0.000200 loss: 0.5882 (0.5756) loss_classifier: 0.1748 (0.1774) loss_box_reg: 0.3733 (0.3542) loss_objectness: 0.0153 (0.0155) loss_rpn_box_reg: 0.0301 (0.0286) time: 0.3437 data: 0.0682 max mem: 1237

Epoch: [26] Total time: 0:00:01 (0.3581 s / it)

Epoch: [27] [0/3] eta: 0:00:01 lr: 0.000200 loss: 0.4331 (0.4331) loss_classifier: 0.1291 (0.1291) loss_box_reg: 0.2540 (0.2540) loss_objectness: 0.0200 (0.0200) loss_rpn_box_reg: 0.0300 (0.0300) time: 0.4378 data: 0.1598 max mem: 1237

Epoch: [27] [2/3] eta: 0:00:00 lr: 0.000200 loss: 0.5343 (0.5311) loss_classifier: 0.1522 (0.1524) loss_box_reg: 0.3354 (0.3280) loss_objectness: 0.0200 (0.0242) loss_rpn_box_reg: 0.0297 (0.0266) time: 0.3314 data: 0.0575 max mem: 1237

Epoch: [27] Total time: 0:00:01 (0.3453 s / it)

Epoch: [28] [0/3] eta: 0:00:01 lr: 0.000200 loss: 0.3621 (0.3621) loss_classifier: 0.1114 (0.1114) loss_box_reg: 0.2179 (0.2179) loss_objectness: 0.0064 (0.0064) loss_rpn_box_reg: 0.0264 (0.0264) time: 0.4696 data: 0.1881 max mem: 1237

Epoch: [28] [2/3] eta: 0:00:00 lr: 0.000200 loss: 0.6419 (0.5496) loss_classifier: 0.1833 (0.1612) loss_box_reg: 0.3990 (0.3418) loss_objectness: 0.0176 (0.0159) loss_rpn_box_reg: 0.0292 (0.0306) time: 0.3461 data: 0.0687 max mem: 1237

Epoch: [28] Total time: 0:00:01 (0.3599 s / it)

Epoch: [29] [0/3] eta: 0:00:01 lr: 0.000200 loss: 0.7010 (0.7010) loss_classifier: 0.2021 (0.2021) loss_box_reg: 0.4513 (0.4513) loss_objectness: 0.0088 (0.0088) loss_rpn_box_reg: 0.0388 (0.0388) time: 0.4849 data: 0.1949 max mem: 1237

Epoch: [29] [2/3] eta: 0:00:00 lr: 0.000200 loss: 0.7010 (0.5999) loss_classifier: 0.2021 (0.1793) loss_box_reg: 0.4513 (0.3833) loss_objectness: 0.0088 (0.0071) loss_rpn_box_reg: 0.0340 (0.0301) time: 0.3545 data: 0.0744 max mem: 1237

Epoch: [29] Total time: 0:00:01 (0.3676 s / it)

creating index...

index created!

Test: [0/2] eta: 0:00:00 model_time: 0.1136 (0.1136) evaluator_time: 0.0246 (0.0246) time: 0.2247 data: 0.0830 max mem: 1237

Test: [1/2] eta: 0:00:00 model_time: 0.1093 (0.1115) evaluator_time: 0.0246 (0.0247) time: 0.1839 data: 0.0446 max mem: 1237

Test: Total time: 0:00:00 (0.2018 s / it)

Averaged stats: model_time: 0.1093 (0.1115) evaluator_time: 0.0246 (0.0247)

Accumulating evaluation results...

DONE (t=0.00s).

IoU metric: bbox

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.267

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.599

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.186

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = -1.000

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.259

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.550

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.017

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.169

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.421

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = -1.000

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.404

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.667

Epoch: [30] [0/3] eta: 0:00:01 lr: 0.000200 loss: 0.6413 (0.6413) loss_classifier: 0.2077 (0.2077) loss_box_reg: 0.4060 (0.4060) loss_objectness: 0.0085 (0.0085) loss_rpn_box_reg: 0.0191 (0.0191) time: 0.4280 data: 0.1448 max mem: 1237

Epoch: [30] [2/3] eta: 0:00:00 lr: 0.000200 loss: 0.6413 (0.5688) loss_classifier: 0.2077 (0.1876) loss_box_reg: 0.4060 (0.3426) loss_objectness: 0.0151 (0.0135) loss_rpn_box_reg: 0.0191 (0.0252) time: 0.3331 data: 0.0543 max mem: 1238

Epoch: [30] Total time: 0:00:01 (0.3494 s / it)

Epoch: [31] [0/3] eta: 0:00:01 lr: 0.000200 loss: 0.6128 (0.6128) loss_classifier: 0.1956 (0.1956) loss_box_reg: 0.3813 (0.3813) loss_objectness: 0.0108 (0.0108) loss_rpn_box_reg: 0.0251 (0.0251) time: 0.5254 data: 0.2264 max mem: 1238

Epoch: [31] [2/3] eta: 0:00:00 lr: 0.000200 loss: 0.5440 (0.5112) loss_classifier: 0.1631 (0.1527) loss_box_reg: 0.3529 (0.3252) loss_objectness: 0.0108 (0.0124) loss_rpn_box_reg: 0.0214 (0.0209) time: 0.3662 data: 0.0837 max mem: 1238

Epoch: [31] Total time: 0:00:01 (0.3918 s / it)

Epoch: [32] [0/3] eta: 0:00:01 lr: 0.000200 loss: 0.5958 (0.5958) loss_classifier: 0.1851 (0.1851) loss_box_reg: 0.3799 (0.3799) loss_objectness: 0.0098 (0.0098) loss_rpn_box_reg: 0.0210 (0.0210) time: 0.5763 data: 0.2368 max mem: 1238

Epoch: [32] [2/3] eta: 0:00:00 lr: 0.000200 loss: 0.5883 (0.5623) loss_classifier: 0.1851 (0.1731) loss_box_reg: 0.3563 (0.3558) loss_objectness: 0.0098 (0.0089) loss_rpn_box_reg: 0.0210 (0.0246) time: 0.3983 data: 0.0906 max mem: 1238

Epoch: [32] Total time: 0:00:01 (0.4274 s / it)

Epoch: [33] [0/3] eta: 0:00:01 lr: 0.000200 loss: 0.5317 (0.5317) loss_classifier: 0.1647 (0.1647) loss_box_reg: 0.3355 (0.3355) loss_objectness: 0.0126 (0.0126) loss_rpn_box_reg: 0.0189 (0.0189) time: 0.6093 data: 0.2953 max mem: 1238

Epoch: [33] [2/3] eta: 0:00:00 lr: 0.000200 loss: 0.5076 (0.4714) loss_classifier: 0.1508 (0.1391) loss_box_reg: 0.3196 (0.3012) loss_objectness: 0.0126 (0.0108) loss_rpn_box_reg: 0.0189 (0.0203) time: 0.4013 data: 0.1095 max mem: 1238

Epoch: [33] Total time: 0:00:01 (0.4309 s / it)

Epoch: [34] [0/3] eta: 0:00:02 lr: 0.000200 loss: 0.5235 (0.5235) loss_classifier: 0.1646 (0.1646) loss_box_reg: 0.3169 (0.3169) loss_objectness: 0.0100 (0.0100) loss_rpn_box_reg: 0.0319 (0.0319) time: 0.7185 data: 0.4121 max mem: 1238

Epoch: [34] [2/3] eta: 0:00:00 lr: 0.000200 loss: 0.5609 (0.5615) loss_classifier: 0.1722 (0.1785) loss_box_reg: 0.3561 (0.3434) loss_objectness: 0.0154 (0.0147) loss_rpn_box_reg: 0.0255 (0.0249) time: 0.4377 data: 0.1462 max mem: 1238

Epoch: [34] Total time: 0:00:01 (0.4608 s / it)

Epoch: [35] [0/3] eta: 0:00:01 lr: 0.000200 loss: 0.4128 (0.4128) loss_classifier: 0.1226 (0.1226) loss_box_reg: 0.2733 (0.2733) loss_objectness: 0.0071 (0.0071) loss_rpn_box_reg: 0.0098 (0.0098) time: 0.4955 data: 0.2121 max mem: 1238

Epoch: [35] [2/3] eta: 0:00:00 lr: 0.000200 loss: 0.4128 (0.5081) loss_classifier: 0.1226 (0.1548) loss_box_reg: 0.2733 (0.3266) loss_objectness: 0.0071 (0.0067) loss_rpn_box_reg: 0.0144 (0.0200) time: 0.3541 data: 0.0760 max mem: 1238

Epoch: [35] Total time: 0:00:01 (0.3672 s / it)

Epoch: [36] [0/3] eta: 0:00:01 lr: 0.000200 loss: 0.4695 (0.4695) loss_classifier: 0.1668 (0.1668) loss_box_reg: 0.2726 (0.2726) loss_objectness: 0.0039 (0.0039) loss_rpn_box_reg: 0.0261 (0.0261) time: 0.4676 data: 0.1824 max mem: 1238

Epoch: [36] [2/3] eta: 0:00:00 lr: 0.000200 loss: 0.4752 (0.5531) loss_classifier: 0.1668 (0.1875) loss_box_reg: 0.2876 (0.3293) loss_objectness: 0.0039 (0.0106) loss_rpn_box_reg: 0.0261 (0.0257) time: 0.3444 data: 0.0673 max mem: 1238

Epoch: [36] Total time: 0:00:01 (0.3568 s / it)

Epoch: [37] [0/3] eta: 0:00:01 lr: 0.000200 loss: 0.4593 (0.4593) loss_classifier: 0.1449 (0.1449) loss_box_reg: 0.2867 (0.2867) loss_objectness: 0.0109 (0.0109) loss_rpn_box_reg: 0.0167 (0.0167) time: 0.4213 data: 0.1445 max mem: 1238

Epoch: [37] [2/3] eta: 0:00:00 lr: 0.000200 loss: 0.4593 (0.4766) loss_classifier: 0.1449 (0.1466) loss_box_reg: 0.2867 (0.3001) loss_objectness: 0.0109 (0.0093) loss_rpn_box_reg: 0.0167 (0.0205) time: 0.3289 data: 0.0552 max mem: 1238

Epoch: [37] Total time: 0:00:01 (0.3418 s / it)

Epoch: [38] [0/3] eta: 0:00:01 lr: 0.000200 loss: 0.6817 (0.6817) loss_classifier: 0.2169 (0.2169) loss_box_reg: 0.4037 (0.4037) loss_objectness: 0.0213 (0.0213) loss_rpn_box_reg: 0.0397 (0.0397) time: 0.4610 data: 0.1762 max mem: 1238

Epoch: [38] [2/3] eta: 0:00:00 lr: 0.000200 loss: 0.4829 (0.5004) loss_classifier: 0.1554 (0.1566) loss_box_reg: 0.2870 (0.3032) loss_objectness: 0.0213 (0.0172) loss_rpn_box_reg: 0.0186 (0.0233) time: 0.3413 data: 0.0634 max mem: 1238

Epoch: [38] Total time: 0:00:01 (0.3546 s / it)

Epoch: [39] [0/3] eta: 0:00:01 lr: 0.000200 loss: 0.5351 (0.5351) loss_classifier: 0.1620 (0.1620) loss_box_reg: 0.3342 (0.3342) loss_objectness: 0.0063 (0.0063) loss_rpn_box_reg: 0.0327 (0.0327) time: 0.4904 data: 0.2083 max mem: 1238

Epoch: [39] [2/3] eta: 0:00:00 lr: 0.000200 loss: 0.5351 (0.5223) loss_classifier: 0.1532 (0.1531) loss_box_reg: 0.3342 (0.3373) loss_objectness: 0.0055 (0.0049) loss_rpn_box_reg: 0.0310 (0.0271) time: 0.3547 data: 0.0758 max mem: 1238

Epoch: [39] Total time: 0:00:01 (0.3683 s / it)

creating index...

index created!

Test: [0/2] eta: 0:00:00 model_time: 0.1160 (0.1160) evaluator_time: 0.0220 (0.0220) time: 0.2289 data: 0.0871 max mem: 1238

Test: [1/2] eta: 0:00:00 model_time: 0.1117 (0.1139) evaluator_time: 0.0220 (0.0226) time: 0.1865 data: 0.0467 max mem: 1238

Test: Total time: 0:00:00 (0.2046 s / it)

Averaged stats: model_time: 0.1117 (0.1139) evaluator_time: 0.0220 (0.0226)

Accumulating evaluation results...

DONE (t=0.00s).

IoU metric: bbox

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.240

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.587

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.130

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = -1.000

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.233

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.545

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.023

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.171

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.383

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = -1.000

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.371

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.567

Epoch: [40] [0/3] eta: 0:00:01 lr: 0.000040 loss: 0.6574 (0.6574) loss_classifier: 0.2129 (0.2129) loss_box_reg: 0.4124 (0.4124) loss_objectness: 0.0031 (0.0031) loss_rpn_box_reg: 0.0291 (0.0291) time: 0.4781 data: 0.1942 max mem: 1238

Epoch: [40] [2/3] eta: 0:00:00 lr: 0.000040 loss: 0.3934 (0.4812) loss_classifier: 0.1219 (0.1484) loss_box_reg: 0.2541 (0.3058) loss_objectness: 0.0058 (0.0064) loss_rpn_box_reg: 0.0184 (0.0206) time: 0.3471 data: 0.0700 max mem: 1238

Epoch: [40] Total time: 0:00:01 (0.3602 s / it)

Epoch: [41] [0/3] eta: 0:00:01 lr: 0.000040 loss: 0.7990 (0.7990) loss_classifier: 0.2617 (0.2617) loss_box_reg: 0.4865 (0.4865) loss_objectness: 0.0125 (0.0125) loss_rpn_box_reg: 0.0383 (0.0383) time: 0.5432 data: 0.2521 max mem: 1238

Epoch: [41] [2/3] eta: 0:00:00 lr: 0.000040 loss: 0.4659 (0.5342) loss_classifier: 0.1314 (0.1646) loss_box_reg: 0.2924 (0.3297) loss_objectness: 0.0160 (0.0154) loss_rpn_box_reg: 0.0260 (0.0244) time: 0.3713 data: 0.0916 max mem: 1238

Epoch: [41] Total time: 0:00:01 (0.3843 s / it)

Epoch: [42] [0/3] eta: 0:00:01 lr: 0.000040 loss: 0.5306 (0.5306) loss_classifier: 0.1665 (0.1665) loss_box_reg: 0.3368 (0.3368) loss_objectness: 0.0046 (0.0046) loss_rpn_box_reg: 0.0228 (0.0228) time: 0.4605 data: 0.1745 max mem: 1238

Epoch: [42] [2/3] eta: 0:00:00 lr: 0.000040 loss: 0.4387 (0.4577) loss_classifier: 0.1368 (0.1441) loss_box_reg: 0.2815 (0.2881) loss_objectness: 0.0071 (0.0066) loss_rpn_box_reg: 0.0211 (0.0188) time: 0.3449 data: 0.0638 max mem: 1238

Epoch: [42] Total time: 0:00:01 (0.3682 s / it)

Epoch: [43] [0/3] eta: 0:00:01 lr: 0.000040 loss: 0.6965 (0.6965) loss_classifier: 0.2337 (0.2337) loss_box_reg: 0.4102 (0.4102) loss_objectness: 0.0187 (0.0187) loss_rpn_box_reg: 0.0339 (0.0339) time: 0.5506 data: 0.2600 max mem: 1238

Epoch: [43] [2/3] eta: 0:00:00 lr: 0.000040 loss: 0.3886 (0.4898) loss_classifier: 0.1143 (0.1526) loss_box_reg: 0.2337 (0.2924) loss_objectness: 0.0242 (0.0227) loss_rpn_box_reg: 0.0165 (0.0220) time: 0.3762 data: 0.0934 max mem: 1238

Epoch: [43] Total time: 0:00:01 (0.3957 s / it)

Epoch: [44] [0/3] eta: 0:00:01 lr: 0.000040 loss: 0.4588 (0.4588) loss_classifier: 0.1400 (0.1400) loss_box_reg: 0.2912 (0.2912) loss_objectness: 0.0097 (0.0097) loss_rpn_box_reg: 0.0179 (0.0179) time: 0.5428 data: 0.2385 max mem: 1238

Epoch: [44] [2/3] eta: 0:00:00 lr: 0.000040 loss: 0.4701 (0.4935) loss_classifier: 0.1447 (0.1449) loss_box_reg: 0.2923 (0.3195) loss_objectness: 0.0097 (0.0076) loss_rpn_box_reg: 0.0230 (0.0215) time: 0.3821 data: 0.0905 max mem: 1238

Epoch: [44] Total time: 0:00:01 (0.4056 s / it)

Epoch: [45] [0/3] eta: 0:00:01 lr: 0.000040 loss: 0.5754 (0.5754) loss_classifier: 0.1756 (0.1756) loss_box_reg: 0.3672 (0.3672) loss_objectness: 0.0118 (0.0118) loss_rpn_box_reg: 0.0209 (0.0209) time: 0.5397 data: 0.2566 max mem: 1238

Epoch: [45] [2/3] eta: 0:00:00 lr: 0.000040 loss: 0.4711 (0.4494) loss_classifier: 0.1470 (0.1391) loss_box_reg: 0.2865 (0.2784) loss_objectness: 0.0118 (0.0148) loss_rpn_box_reg: 0.0172 (0.0171) time: 0.3687 data: 0.0907 max mem: 1238

Epoch: [45] Total time: 0:00:01 (0.3802 s / it)

Epoch: [46] [0/3] eta: 0:00:01 lr: 0.000040 loss: 0.5908 (0.5908) loss_classifier: 0.1661 (0.1661) loss_box_reg: 0.3840 (0.3840) loss_objectness: 0.0183 (0.0183) loss_rpn_box_reg: 0.0224 (0.0224) time: 0.4726 data: 0.1859 max mem: 1238

Epoch: [46] [2/3] eta: 0:00:00 lr: 0.000040 loss: 0.4913 (0.5042) loss_classifier: 0.1642 (0.1548) loss_box_reg: 0.3021 (0.3180) loss_objectness: 0.0078 (0.0090) loss_rpn_box_reg: 0.0224 (0.0224) time: 0.3471 data: 0.0678 max mem: 1238

Epoch: [46] Total time: 0:00:01 (0.3613 s / it)

Epoch: [47] [0/3] eta: 0:00:01 lr: 0.000040 loss: 0.3532 (0.3532) loss_classifier: 0.0960 (0.0960) loss_box_reg: 0.2351 (0.2351) loss_objectness: 0.0024 (0.0024) loss_rpn_box_reg: 0.0196 (0.0196) time: 0.4486 data: 0.1670 max mem: 1238

Epoch: [47] [2/3] eta: 0:00:00 lr: 0.000040 loss: 0.5930 (0.5247) loss_classifier: 0.1881 (0.1587) loss_box_reg: 0.3605 (0.3308) loss_objectness: 0.0093 (0.0110) loss_rpn_box_reg: 0.0232 (0.0243) time: 0.3459 data: 0.0653 max mem: 1238

Epoch: [47] Total time: 0:00:01 (0.3596 s / it)

Epoch: [48] [0/3] eta: 0:00:01 lr: 0.000040 loss: 0.4339 (0.4339) loss_classifier: 0.1421 (0.1421) loss_box_reg: 0.2744 (0.2744) loss_objectness: 0.0028 (0.0028) loss_rpn_box_reg: 0.0145 (0.0145) time: 0.4299 data: 0.1482 max mem: 1238

Epoch: [48] [2/3] eta: 0:00:00 lr: 0.000040 loss: 0.4339 (0.4410) loss_classifier: 0.1421 (0.1397) loss_box_reg: 0.2744 (0.2752) loss_objectness: 0.0049 (0.0091) loss_rpn_box_reg: 0.0154 (0.0170) time: 0.3319 data: 0.0539 max mem: 1238

Epoch: [48] Total time: 0:00:01 (0.3458 s / it)

Epoch: [49] [0/3] eta: 0:00:01 lr: 0.000040 loss: 0.5952 (0.5952) loss_classifier: 0.1497 (0.1497) loss_box_reg: 0.3691 (0.3691) loss_objectness: 0.0265 (0.0265) loss_rpn_box_reg: 0.0500 (0.0500) time: 0.5417 data: 0.2492 max mem: 1238

Epoch: [49] [2/3] eta: 0:00:00 lr: 0.000040 loss: 0.5952 (0.5496) loss_classifier: 0.1497 (0.1625) loss_box_reg: 0.3691 (0.3399) loss_objectness: 0.0116 (0.0160) loss_rpn_box_reg: 0.0334 (0.0311) time: 0.3749 data: 0.0916 max mem: 1238

Epoch: [49] Total time: 0:00:01 (0.3879 s / it)

creating index...

index created!

Test: [0/2] eta: 0:00:00 model_time: 0.1185 (0.1185) evaluator_time: 0.0211 (0.0211) time: 0.2301 data: 0.0871 max mem: 1238

Test: [1/2] eta: 0:00:00 model_time: 0.1130 (0.1158) evaluator_time: 0.0211 (0.0228) time: 0.1883 data: 0.0466 max mem: 1238

Test: Total time: 0:00:00 (0.2060 s / it)

Averaged stats: model_time: 0.1130 (0.1158) evaluator_time: 0.0211 (0.0228)

Accumulating evaluation results...

DONE (t=0.00s).

IoU metric: bbox

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.230

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.545

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.126

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = -1.000

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.226

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.534

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.019

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.162

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.385

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = -1.000

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.376

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.533
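A note on reading the summary above: in the COCO evaluation tool, a value of -1.000 is reported when there are no ground-truth boxes in that category, so the `small` rows here most likely mean that the test images contain no small tuna boxes rather than that detection failed. The headline number is the first row (AP averaged over IoU=0.50:0.95), which was 0.240 after epoch 39 and 0.230 after epoch 49. The following is a minimal sketch, not part of the original notebook, showing one way to turn such printed lines into a dictionary for comparison across epochs. The names `SUMMARY`, `LINE_RE`, and `parse_coco_summary` are hypothetical helpers introduced only for this illustration.

```python
import re

# A few summary lines copied from the evaluation output after epoch 49.
SUMMARY = """\
Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.230
Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.545
Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = -1.000
Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.385
"""

# Hypothetical pattern matching one printed summary line.
LINE_RE = re.compile(
    r"Average (?:Precision|Recall)\s+\((AP|AR)\)\s+@\[\s*IoU=([\d.:]+)\s*\|"
    r"\s*area=\s*(\w+)\s*\|\s*maxDets=\s*(\d+)\s*\]\s*=\s*(-?\d+\.\d+)"
)


def parse_coco_summary(text):
    """Map (metric, IoU range, area, maxDets) to a float, or None if not applicable."""
    results = {}
    for match in LINE_RE.finditer(text):
        metric, iou, area, max_dets, value = match.groups()
        value = float(value)
        # Negative values mark categories with no ground-truth boxes.
        results[(metric, iou, area, int(max_dets))] = None if value < 0 else value
    return results


stats = parse_coco_summary(SUMMARY)
for key, value in stats.items():
    print(key, "n/a" if value is None else value)
```

Storing the not-applicable rows as `None` keeps them from being averaged or plotted as if they were real scores.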

Epoch: [50] [0/3] eta: 0:00:01 lr: 0.000040 loss: 0.4961 (0.4961) loss_classifier: 0.1517 (0.1517) loss_box_reg: 0.2979 (0.2979) loss_objectness: 0.0171 (0.0171) loss_rpn_box_reg: 0.0293 (0.0293) time: 0.4946 data: 0.2104 max mem: 1238

Epoch: [50] [2/3] eta: 0:00:00 lr: 0.000040 loss: 0.4961 (0.4826) loss_classifier: 0.1517 (0.1463) loss_box_reg: 0.2979 (0.2976) loss_objectness: 0.0132 (0.0144) loss_rpn_box_reg: 0.0229 (0.0243) time: 0.3575 data: 0.0778 max mem: 1238

Epoch: [50] Total time: 0:00:01 (0.3707 s / it)

Epoch: [51] [0/3] eta: 0:00:01 lr: 0.000040 loss: 0.4982 (0.4982) loss_classifier: 0.1735 (0.1735) loss_box_reg: 0.2870 (0.2870) loss_objectness: 0.0233 (0.0233) loss_rpn_box_reg: 0.0144 (0.0144) time: 0.4373 data: 0.1513 max mem: 1238

Epoch: [51] [2/3] eta: 0:00:00 lr: 0.000040 loss: 0.4594 (0.4574) loss_classifier: 0.1478 (0.1458) loss_box_reg: 0.2870 (0.2729) loss_objectness: 0.0164 (0.0173) loss_rpn_box_reg: 0.0144 (0.0214) time: 0.3351 data: 0.0555 max mem: 1238

Epoch: [51] Total time: 0:00:01 (0.3479 s / it)